Using Cloud Disk CDS

Overview

Cloud Container Engine (CCE) supports Baidu AI Cloud’s Cloud Disk Server (CDS): you create PV/PVC resources and mount them as data volumes for workloads. This document describes how to mount a cloud disk in a cluster dynamically and statically.

Usage restrictions

- Ensure the Kubernetes version of the cluster is 1.16 or above.

- Pods mounting CDS can only be deployed on nodes that support cloud disk server mounting.

- The number of CDS disks that can be mounted on each node is limited by both the configuration parameters of the CCE CSI CDS Plugin component and the Baidu Cloud Compute instance itself. For more information, see Create Server Instance.

- Currently, cloud disks only support pay-as-you-go mode and cannot be created under subscription mode.

Prerequisites

- The cluster has installed block storage service components. For more information, please refer to CCE CSI CDS Plugin Description.

Operation steps

There are two ways to use CDS via PV/PVC:

| Method | Description | Note |
|---|---|---|
| Static mounting | Users must pre-create CDS on Baidu AI Cloud (refer to the CDS documentation for instructions) and then use the CDS volume ID to create PV and PVC resources within the cluster. | |
| Dynamic mounting | When a PVC is declared in the cluster, a pay-as-you-go CDS disk is automatically created and dynamically linked to the PV. | |

Dynamically mount the cloud disk service

Method I: Operation via kubectl command line

1. Create a StorageClass

- Cluster administrators can define various storage classes for the cluster using StorageClass. Storage resources can be dynamically created through StorageClass in combination with PVC.

- This guide explains how to use Kubectl to create a StorageClass for Cloud Disk Server (CDS) and customize the necessary templates for block storage use.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: hp1  # StorageClass name
    provisioner: csi-cdsplugin
    allowVolumeExpansion: true
    volumeBindingMode: WaitForFirstConsumer
    parameters:
      # Payment method. Postpaid means pay-as-you-go, the only mode currently supported.
      paymentTiming: "Postpaid"
      # Disk type: cloud_hp1, hp1, or hdd.
      storageType: "hp1"
      # Recycle policy applied when the cloud disk is deleted: "on" moves the disk
      # to the recycle bin, "off" does not. Takes effect only when reclaimPolicy
      # is Delete. Default: on.
      recycle: "on"
    # Reclaim policy: Delete or Retain. Delete removes the PV and the cloud disk
    # together with the PVC (the disk goes to the recycle bin by default).
    # Retain keeps the PV and the cloud disk, which you must delete manually.
    # Default: Delete.
    reclaimPolicy: Delete

Description:

- storageType is used to set the type of dynamically created CDS disks, supporting: cloud_hp1 (general-purpose SSD), hp1 (high-performance cloud disk), and hdd (general-purpose HDD). For more information, refer to Create CDS Cloud Disk.

- If Pods in the cluster use CDS in dynamic mode and the reclaimPolicy of the corresponding StorageClass is Delete, the CDS disks are still retained by default when the cluster is deleted. To delete them along with the cluster, stop the relevant Pods and delete the corresponding PVCs manually before deleting the cluster.
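The StorageClass step can be scripted. The following is a minimal sketch, assuming kubectl is already configured for the CCE cluster; the function name apply_storageclass and the manifest file name are hypothetical and not part of CCE.

```shell
# Hypothetical helper (not part of CCE): apply a StorageClass manifest and
# print the provisioner recorded for it.
#   $1: path to the manifest file (assumed name, e.g. storageclass-hp1.yaml)
#   $2: StorageClass name from metadata.name
apply_storageclass() {
  manifest="$1"
  name="$2"
  # Stop early if the manifest is rejected.
  kubectl apply -f "$manifest" >/dev/null || return 1
  # For a CDS StorageClass this should print csi-cdsplugin.
  kubectl get storageclass "$name" -o jsonpath='{.provisioner}'
}
```

For example, apply_storageclass storageclass-hp1.yaml hp1 would apply the manifest shown above, provided it was saved under that file name.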

2. Create a persistent volume claim (PVC)

Note: storageClassName must match the name of the StorageClass created in the previous step.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: csi-pvc-cds
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: hp1
      resources:
        requests:
          storage: 5Gi  # Specify the PVC storage space size

Note: Different disk types support different storage sizes. For example: hp1: 5 - 32,765 GB, cloud_hp1: 50 - 32,765 GB, hdd: 5 - 32,765 GB.
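The size ranges in the note can be checked before a PVC is submitted. This is an illustrative sketch only: the function name cds_size_ok is hypothetical, the limits are copied from the note above, and the size is treated as a plain integer in GB (how the plugin rounds Gi requests is not covered here).

```shell
# Hypothetical pre-flight check: succeed when the requested size (integer, GB)
# falls inside the range listed in the note for the given disk type.
#   $1: disk type (hp1, cloud_hp1, or hdd)
#   $2: requested size in GB
cds_size_ok() {
  disk_type="$1"
  size_gb="$2"
  case "$disk_type" in
    hp1|hdd)   min_gb=5 ;;
    cloud_hp1) min_gb=50 ;;
    *)         return 2 ;;   # unknown disk type
  esac
  [ "$size_gb" -ge "$min_gb" ] && [ "$size_gb" -le 32765 ]
}
```

For example, cds_size_ok cloud_hp1 5 fails because cloud_hp1 disks start at 50 GB, while cds_size_ok hp1 5 succeeds.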

3. Check that the PVC status is bound

    $ kubectl get pvc csi-pvc-cds
    NAME          STATUS   VOLUME                 CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    csi-pvc-cds   Bound    pvc-1ab36e4d1d2711e9   5Gi        RWO            hp1            4s

Note: If volumeBindingMode is set to WaitForFirstConsumer, the PVC stays in the Pending status at this point. The PV is created and bound only after the first Pod that mounts this PVC is created.
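Because the PVC may stay Pending until a consuming Pod exists, a script usually has to poll for the Bound phase. The sketch below is one way to do that; the function name wait_for_pvc_bound and its default attempt count and delay are assumptions chosen for illustration.

```shell
# Hypothetical helper: poll a PVC until it reports the Bound phase.
#   $1: PVC name
#   $2: maximum number of attempts (default 30)
#   $3: delay between attempts in seconds (default 2)
# Prints the last observed phase; returns 0 only if the PVC became Bound.
wait_for_pvc_bound() {
  pvc="$1"
  attempts="${2:-30}"
  delay="${3:-2}"
  phase=""
  i=0
  while [ "$i" -lt "$attempts" ]; do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "Bound"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "${phase:-Unknown}"
  return 1
}
```

With WaitForFirstConsumer, call it only after the Pod that mounts the PVC has been created, for example wait_for_pvc_bound csi-pvc-cds.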

4. Mount the PVC in the Pod

The Pod and PVC must reside within the same namespace.

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pvc-pod
      labels:
        app: test-pvc-pod
    spec:
      containers:
      - name: test-pvc-pod
        image: nginx
        volumeMounts:
        - name: cds-pvc
          mountPath: "/cds-volume"
      volumes:
      - name: cds-pvc
        persistentVolumeClaim:
          claimName: csi-pvc-cds

Once the Pod is created, you can access the corresponding CDS storage by reading and writing to the /cds-volume path in the container.
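A quick way to confirm that the mount is writable is to write and read back a small file from inside the container. This is a sketch under assumptions: the function name check_volume_rw and the probe file name .cds-probe are hypothetical, and the container image must provide sh.

```shell
# Hypothetical helper: write, read back, and remove a probe file on the mount.
#   $1: Pod name
#   $2: mount path inside the container
check_volume_rw() {
  pod="$1"
  mount_path="$2"
  probe="$mount_path/.cds-probe"
  # Prints the probe file content if the volume is readable and writable.
  kubectl exec "$pod" -- sh -c "echo ok > '$probe' && cat '$probe' && rm -f '$probe'"
}
```

For the Pod above, the call would be check_volume_rw test-pvc-pod /cds-volume.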

Method II: Operation via console

- Log in to the CCE console and click on the cluster name to navigate to the Cluster Details page.

- Create a new StorageClass.

a. In the left navigation bar, select Storage Configuration - Storage Class to enter the Storage Class List page.

b. Click Create Storage Class at the top of the storage class list. Customize relevant configurations in the File Template. storageType is used to set the type of dynamically created CDS disks, supporting: cloud_hp1 (general-purpose SSD), hp1 (high-performance cloud disk), and hdd (general-purpose HDD).

c. Click OK to create the storage class.

- Create a Persistent Volume Claim (PVC).

a. In the left navigation bar, select Storage Configuration - Persistent Volume Claims to enter the persistent volume claim list.

b. Click the Create Newly Persistent Volume Claim button at the top of the list, and select Form Creation or YAML Creation.

c. If Form Creation is selected, fill in the relevant parameters. For details on the three access modes, refer to Storage Management Overview. After clicking OK, enter the value of storageClassName (i.e., the name of the storage class) in the Confirm Configuration pop-up window. Click OK after the configuration is correct.

d. After creating the PVC, you can see in Persistent Volume Claims List - Persistent Volumes Column that the corresponding PV is automatically created, and the PVC status column shows "bound", indicating that the PVC has been bound to the newly created PV.

- Create an application, mount the PVC, specify the associated PVC name in the Pod specification, and ensure that the Pod and PVC are in the same namespace. Once the Pod is created, you can access content on the corresponding CDS storage by reading and writing to the /cds-volume path in the container.

Statically mount the cloud disk service

Method I: Operation via kubectl command line

1. Create a persistent volume (PV)

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-cds
      namespace: "default"
    spec:
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 5Gi
      csi:
        driver: "csi-cdsplugin"
        volumeHandle: "v-xxxx"  # Specify the disk to be mounted, in the format of disk ID
        fsType: "ext4"
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: failure-domain.beta.kubernetes.io/zone
              operator: In
              values:
              - zoneA
      persistentVolumeReclaimPolicy: Retain

Note:

- If fsType is not provided, the default value is ext4. In Filesystem mode, mounting fails if fsType doesn’t match the actual file system type in CDS. If no file system exists in CDS, it is automatically formatted to the file system type specified by fsType during mounting.

- In nodeAffinity, specify the availability zone where the CDS is located, so that the Pod mounting the CDS is only scheduled to nodes within the same availability zone as the CDS disk.
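Before setting nodeAffinity, it can help to confirm that the cluster actually has nodes in the disk's availability zone. The sketch below uses a label selector on the zone label shown in the PV example; the function name nodes_in_zone is hypothetical.

```shell
# Hypothetical helper: list the nodes that carry the given availability zone label.
#   $1: zone value, for example zoneA
nodes_in_zone() {
  zone="$1"
  kubectl get nodes -l "failure-domain.beta.kubernetes.io/zone=$zone" -o name
}
```

An empty result for nodes_in_zone zoneA would mean that no node can host a Pod that mounts a disk located in zoneA.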

2. Create a persistent volume claim (PVC)

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: csi-cds-pvc
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 5Gi

3. Check that the PVC status is Bound and that it is bound to the corresponding PV

    $ kubectl get pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                 STORAGECLASS   REASON   AGE
    pv-cds   5Gi        RWO            Retain           Bound    default/csi-cds-pvc                           4s
    $ kubectl get pvc
    NAME          STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    csi-cds-pvc   Bound    pv-cds   5Gi        RWO                           36s

4. Mount the PVC in the Pod

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server-deployment
      labels:
        test: ws
    spec:
      replicas: 1
      selector:
        matchLabels:
          se: ws
      template:
        metadata:
          labels:
            se: ws
          name: web-server
        spec:
          containers:
          - name: web-server
            image: nginx
            volumeMounts:
            - mountPath: /var/lib/www/html
              name: csi-cds-pvc
          volumes:
          - name: csi-cds-pvc
            persistentVolumeClaim:
              claimName: csi-cds-pvc

After the Pod is created, you can access content on the corresponding CDS storage by reading and writing to the /var/lib/www/html path in the container.

Because only the ReadWriteOnce access mode is supported when creating the PV and PVC, the volume can be mounted read-write by one node at a time. Multiple Pods can read and write through this PVC only if they all run on that same node.

Method II: Operation via console

- Log in to the CCE console and click on the cluster name to view the cluster details.

- Create a Persistent Volume (PV).

a. In the left navigation bar, select Storage Configuration - Persistent Volume to enter the persistent volume list.

b. Click the Create Newly Persistent Volume button at the top of the persistent volume list, and select Form Creation or YAML Creation.

c. If Form Creation is selected, fill in the relevant parameters, set the storage usage as needed, and select Cloud Disk Server (CDS) for the storage class, and enter the cloud disk ID. Click OK, then confirm the configuration in the second pop-up window, and click OK to create the PV. The cloud disk ID can be viewed in the CDS Console.

- Create a Persistent Volume Claim (PVC) that can be bound to a Persistent Volume (PV).

a. In the left navigation bar, select Storage Configuration - Persistent Volume Claims to enter the persistent volume claim list.

b. Click the Create Newly Persistent Volume Claim button at the top of the persistent volume claim list, and select Form Creation or YAML Creation.

c. Configure the storage usage, access mode and storage class (optional) of the PVC according to the previously created PV, then click OK to create the PVC. The system will search for existing PV resources to find a PV that matches the PVC request.

Once bound, you can see the status columns of the PV and PVC change to "Bound" in the Persistent Volume list and Persistent Volume Claim list, respectively.

- Deploy an application, mount the PVC, and specify the corresponding PVC name in the Pod specification.

Application scenarios

Batch using PVCs via claimTemplate in StatefulSet

- Create a 2-replica StatefulSet and specify volumeClaimTemplates. You need to create the corresponding StorageClass in advance:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: www
        spec:
          accessModes: [ "ReadWriteOnce" ]
          resources:
            requests:
              storage: 5Gi
          storageClassName: hp1

- Inspect the Pods and the automatically created PVCs.

    $ kubectl get pod
    NAME    READY   STATUS    RESTARTS   AGE
    web-0   1/1     Running   0          3m
    web-1   1/1     Running   0          2m

    $ kubectl get pvc
    NAME        STATUS   VOLUME                 CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    www-web-0   Bound    pvc-a1e885701d2f11e9   5Gi        RWO            hp1            6m
    www-web-1   Bound    pvc-c91edb891d2f11e9   5Gi        RWO            hp1            5m

Mount CDS in a multi-availability zone cluster

Currently, CDS does not support operations across availability zones. If a CCE cluster has nodes in multiple availability zones, handle dynamic mounting in one of two ways. Either set the Volume Binding Mode of the StorageClass to WaitForFirstConsumer, so that CDS disks are created automatically in the availability zone of the node where the Pod is scheduled, or specify the availability zone through the allowedTopologies field of the StorageClass or the node affinity attribute of the Pod. When using an existing CDS, configure the nodeAffinity parameter of the PV so that the availability zones match.

CCE automatically adds availability zone labels to cluster nodes by default:

    $ kubectl get nodes --show-labels
    NAME            STATUS   ROLES    AGE   VERSION   LABELS
    192.168.80.15   Ready    <none>   13d   v1.8.12   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/instance-type=BCC,beta.kubernetes.io/os=linux,failure-domain.beta.kubernetes.io/region=bj,failure-domain.beta.kubernetes.io/zone=zoneC,kubernetes.io/hostname=192.168.80.15

The label failure-domain.beta.kubernetes.io/zone=zoneC indicates that the cluster node is located in availability zone C.
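The zone value can be pulled out of a LABELS string like the one above with standard text tools. This is a small sketch; the function name zone_from_labels is hypothetical, and it assumes the comma-separated key=value format printed by --show-labels.

```shell
# Hypothetical helper: extract the availability zone from a comma-separated
# label string as printed in the LABELS column of kubectl get nodes --show-labels.
#   $1: label string
zone_from_labels() {
  printf '%s\n' "$1" \
    | tr ',' '\n' \
    | sed -n 's|^failure-domain\.beta\.kubernetes\.io/zone=||p'
}
```

As an alternative that needs no parsing, kubectl get nodes -L failure-domain.beta.kubernetes.io/zone prints the zone as its own column.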

Method 1: Set the Volume Binding Mode of the StorageClass

To address this issue, set volumeBindingMode to WaitForFirstConsumer. With this setting, the Pod is scheduled first, and a CDS disk is then created in the availability zone corresponding to the scheduling result.

Usage example

- Create a StorageClass and specify volumeBindingMode as WaitForFirstConsumer.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: csi-cds
    provisioner: csi-cdsplugin
    allowVolumeExpansion: true
    volumeBindingMode: WaitForFirstConsumer
    parameters:
      paymentTiming: "Postpaid"
      storageType: "hp1"
    reclaimPolicy: Delete

- Use PVCs via claimTemplate in StatefulSet:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: www
        spec:
          accessModes: [ "ReadWriteOnce" ]
          resources:
            requests:
              storage: 5Gi
          storageClassName: csi-cds

Method 2: Force a specific zone via the allowedTopologies field of the StorageClass

- Create a StorageClass and configure the allowedTopologies field.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: hp1-zonec
    provisioner: csi-cdsplugin
    parameters:
      paymentTiming: "Postpaid"
      storageType: "hp1"
    reclaimPolicy: Delete
    volumeBindingMode: WaitForFirstConsumer
    allowedTopologies:
    - matchLabelExpressions:
      - key: failure-domain.beta.kubernetes.io/zone
        values:
        - zoneC

- Use PVCs via claimTemplate in StatefulSet:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: sts-multi-zone
    spec:
      serviceName: "nginx"
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: www
        spec:
          accessModes: [ "ReadWriteOnce" ]
          resources:
            requests:
              storage: 40Gi
          storageClassName: hp1-zonec

Expand CDS PV

Expanding a CDS PV requires a Kubernetes cluster version of 1.16 or higher and is only applicable to dynamically created CDS PVs.

- Create a StorageClass and add the allowVolumeExpansion: true configuration

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: hp1
    allowVolumeExpansion: true  # Allow disk expansion
    ...

- Modify the disk capacity in spec.resources.requests of the PVC bound to the PV to the target capacity to trigger expansion

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: csi-pvc-cds
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: hp1
      resources:
        requests:
          storage: 8Gi  # Modify to the target disk size

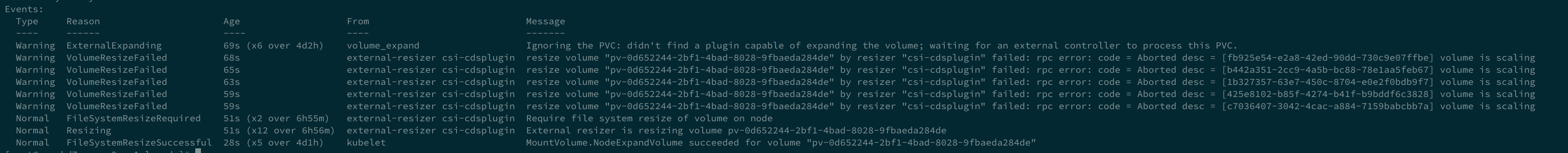

By default, the CDS CSI plugin supports only offline expansion: the actual expansion is performed only while the PVC is not mounted by any Pod. If no Pod mounts the PVC when its capacity request is modified, the CDS disk expansion is triggered right away; otherwise, the expansion starts after the Pods mounting the PVC are stopped. Expansion progress can be followed through the events shown by kubectl describe pvc <pvcName>.

Note:

- If you need to perform online expansion while the PVC is mounted by a Pod, you can enable the online expansion function by running kubectl -n kube-system edit deployment csi-cdsplugin-controller-server and adding the startup parameter --enable-online-expansion to the csi-cdsplugin container.

- However, performing an online expansion under high load may lead to IO performance degradation or IO errors. It is recommended to carry out the operation during periods of low load and to create snapshot backups beforehand.
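Instead of editing the PVC manifest by hand, the capacity request can be changed with a patch. The sketch below is one possible approach, assuming the PVC lives in the current namespace; the function name expand_pvc is hypothetical.

```shell
# Hypothetical helper: raise the storage request of a PVC to trigger expansion.
#   $1: PVC name
#   $2: target size, for example 8Gi (must be larger than the current size)
expand_pvc() {
  pvc="$1"
  size="$2"
  kubectl patch pvc "$pvc" \
    -p "{\"spec\":{\"resources\":{\"requests\":{\"storage\":\"$size\"}}}}"
}
```

For example, expand_pvc csi-pvc-cds 8Gi requests the same change as the manifest above. With the default offline mode, the disk is resized only once no Pod mounts the PVC, and kubectl describe pvc csi-pvc-cds shows the related events.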