Create MXNet Task

Updated at: 2025-10-27

This page describes how to create a task of type MXNet.

Prerequisites

- The CCE AI Job Scheduler and CCE Deep Learning Frameworks Operator components are installed. Without them, the cloud-native AI features are unavailable.

- If you are an IAM user, you can create tasks in a queue only if you are one of the users associated with that queue.

- Installing the CCE Deep Learning Frameworks Operator component also installs the MXNet deep learning framework automatically.

Operation steps

- Sign in to Baidu AI Cloud and open the management console.

- Go to Product Services - Cloud Native - Cloud Container Engine (CCE) to open the CCE management console.

- In the left navigation pane, click Cluster Management - Cluster List.

- On the Cluster List page, click the name of the target cluster to open its management page.

- On the Cluster Management page, click Cloud-Native AI - Task Management.

- Click Create Task on the Task Management page.

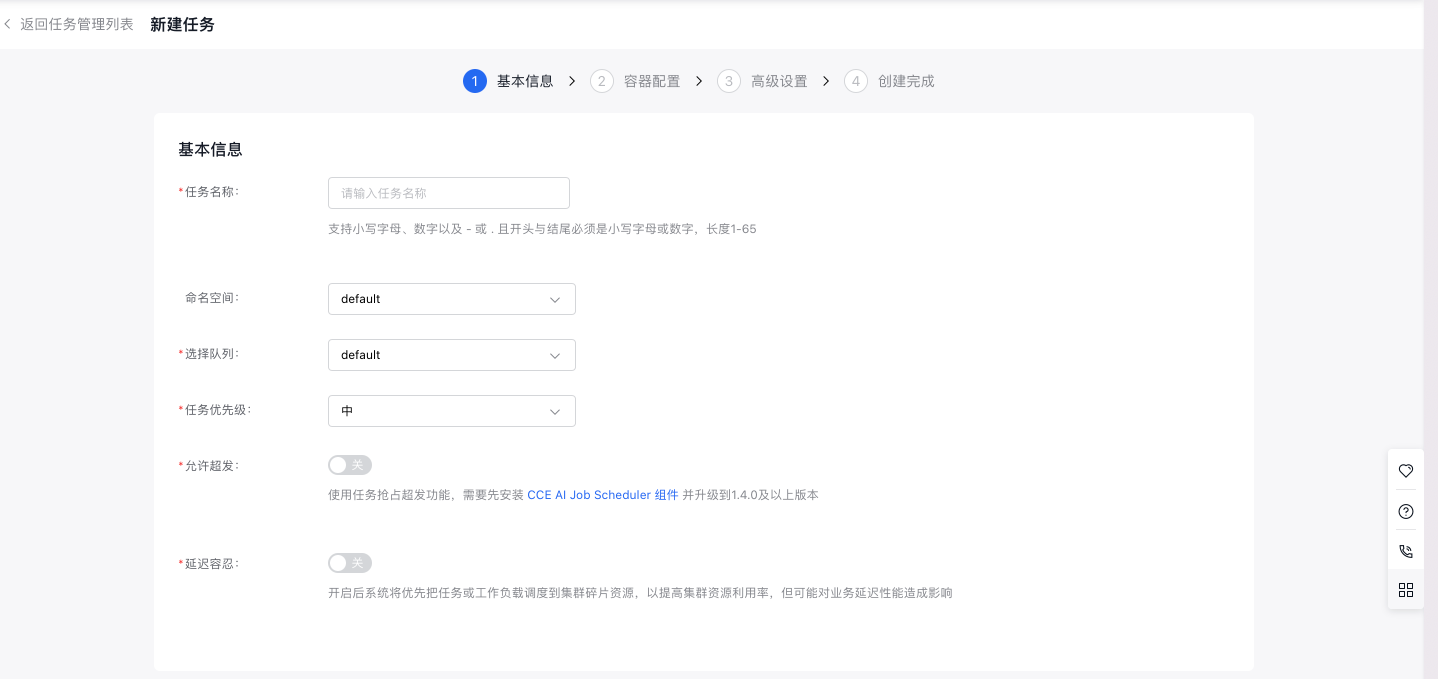

- On the Create Task page, configure basic task information:

- Task name: Enter a custom name consisting of lowercase letters, digits, "-", and ".". The name must start and end with a lowercase letter or digit and be 1 to 65 characters long.

- Namespace: Choose the namespace for the new task.

- Select queue: Choose the queue associated with the new task.

- Task priority: Set the priority level for the task.

- Allow overcommitment: Enable this option to allow overcommitment through task preemption. This requires the CCE AI Job Scheduler component at version 1.4.0 or later.

- Tolerance for delay: When this option is enabled, the system preferentially schedules the task onto fragmented cluster resources to improve resource utilization. This may increase business latency.
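The task-name rule above is precise enough to check locally before you fill in the form. The following Python sketch encodes the rule as a regular expression. `is_valid_task_name` is a hypothetical helper, and the console may enforce additional constraints that are not documented here.

```python
import re

# Documented rule: lowercase letters, digits, "-" and "."; must start and end
# with a lowercase letter or digit; 1 to 65 characters in total.
# One leading char + up to 63 middle chars + one trailing char = at most 65.
TASK_NAME_PATTERN = re.compile(r"[a-z0-9](?:[a-z0-9.-]{0,63}[a-z0-9])?")


def is_valid_task_name(name: str) -> bool:
    """Return True if `name` satisfies the documented task-name rule."""
    return TASK_NAME_PATTERN.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["mxnet-job", "mxnet.job.v1", "MXNet-Job", "job-", "a" * 66]:
        print(f"{candidate[:20]!r}: {is_valid_task_name(candidate)}")
```

For example, `is_valid_task_name("mxnet-job")` returns `True`. The helper rejects `"MXNet-Job"` because it contains uppercase letters, and it rejects `"job-"` because the name ends with a hyphen.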

- Configure basic code information:

- Code configuration type: Choose how the code is provided. Supported options are "BOS File", "Local File Upload", "Git Code Repository", and "Not Configured Temporarily".

- Execution command: Enter the command used to run the code.

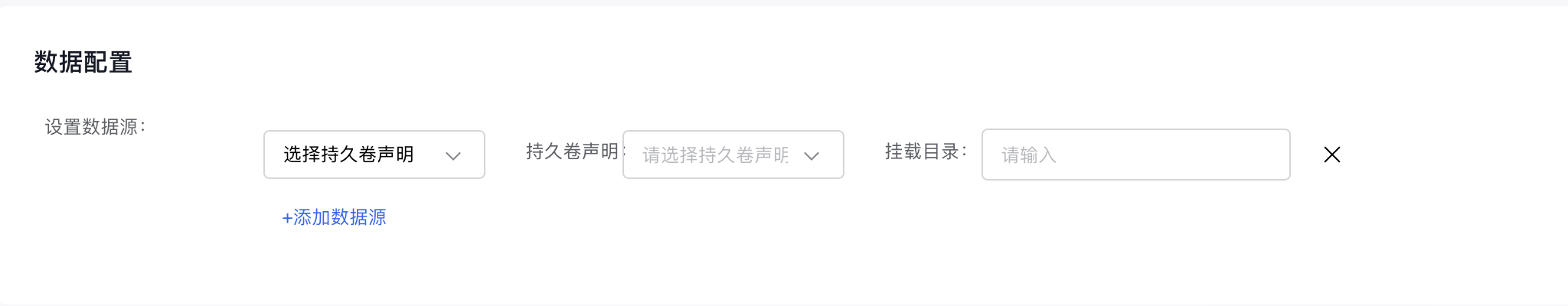
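Kubernetes containers receive the command to run as separate `command` and `args` lists. The YAML example at the end of this page shows this: `command: ["python"]`, with the script path and flags in `args`. The sketch below shows one way to split a single-line command into that form using Python's standard `shlex.split`. It assumes shell-style quoting and treats the first token as the executable. `to_command_and_args` is hypothetical, and CCE does not document how the console performs this split.

```python
import shlex


def to_command_and_args(execution_command: str) -> tuple[list[str], list[str]]:
    """Split a shell-style command line into a container `command` and `args`.

    Hypothetical helper: the first token is treated as the executable and the
    remaining tokens as its arguments, using POSIX shell quoting rules.
    """
    tokens = shlex.split(execution_command)
    if not tokens:
        raise ValueError("execution command must not be empty")
    return tokens[:1], tokens[1:]


if __name__ == "__main__":
    command, args = to_command_and_args(
        "python /incubator-mxnet/example/image-classification/train_mnist.py "
        "--num-epochs 10 --kv-store dist_device_sync --gpus 0"
    )
    print(command)  # ['python']
    print(args)
```

No shell is involved here, so pipes, `&&`, and variable expansion are not interpreted. A command that relies on them must be wrapped as `["/bin/sh", "-c", "..."]`, which is what the preStop hook in the YAML example does.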

- Configure data-related information:

- Set data source: Supported sources are datasets, persistent volume claims (PVCs), temporary paths, and host paths. For a dataset, all available datasets are listed, and selecting one automatically matches the PVC with the same name. For a persistent volume claim, select the claim directly.

- Click "Next" to proceed to container-related configurations.
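In Kubernetes terms, mounting a persistent volume claim requires two pieces:

- a `persistentVolumeClaim` volume in the pod spec;
- a matching `volumeMount` in the container.

The Python sketch below builds both fragments for a dataset whose PVC has the same name as the dataset, as described above. The helper name `pvc_data_source`, the volume name `dataset`, and the mount path `/data` are assumptions for illustration. The console may choose different values.

```python
import json


def pvc_data_source(
    dataset_name: str, volume_name: str = "dataset", mount_path: str = "/data"
) -> dict:
    """Return pod-spec fragments that mount the PVC matching a dataset.

    The PVC has the same name as the dataset, so the dataset name is used as
    claimName. volume_name and mount_path are illustrative defaults only.
    """
    return {
        # Belongs under the pod template's spec.volumes.
        "volumes": [
            {"name": volume_name, "persistentVolumeClaim": {"claimName": dataset_name}}
        ],
        # Belongs under the container's volumeMounts; the name must match the volume.
        "volumeMounts": [{"name": volume_name, "mountPath": mount_path}],
    }


if __name__ == "__main__":
    print(json.dumps(pvc_data_source("mnist"), indent=2))
```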

- Configure task type information:

- Select framework: Choose MXNet.

- Training method: Select Single-machine or Distributed.

- Select role: For single-machine training, only "Worker" can be selected. For distributed training, additional roles such as "PS", "Chief", and "Evaluator" are available.

- Configure pod information (advanced settings are optional).

- Number of pods: Specify how many pods to create for the role.

- Restart policy: Choose "Restart on Failure" or "Never Restart".

- Image address: Enter the address from which the container image is pulled, or click Select Image to choose an image.

- Image version: Enter the image version. If left empty, the latest version is used.

- Container Quota: Specify the CPU, memory, and GPU/NPU resource allocation for the container.

- Environment Variables: Enter the variable names and their corresponding values.

- Lifecycle: Optionally customize the startup command, its arguments, the post-start action, and the pre-stop action.

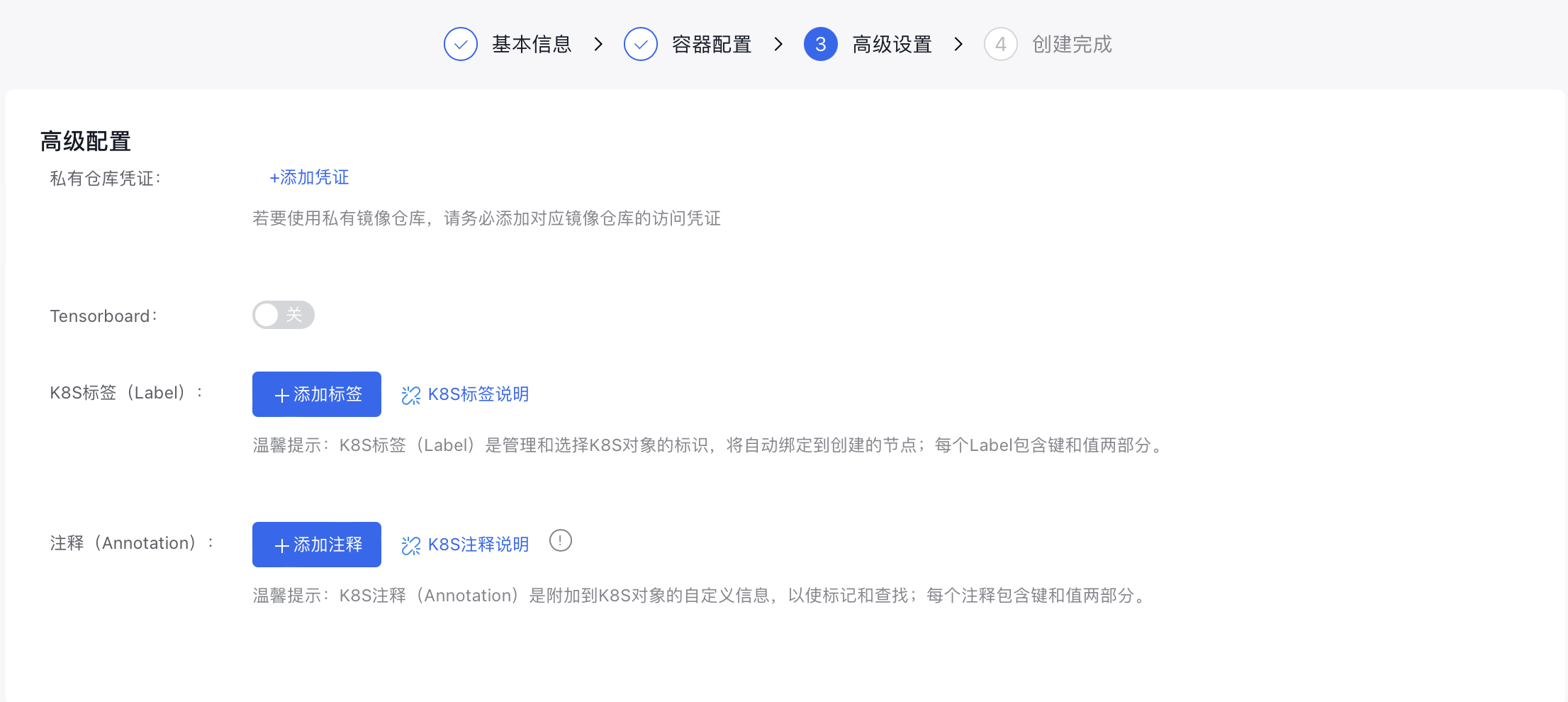
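The pod settings above correspond roughly to one entry under `mxReplicaSpecs` in the YAML example at the end of this page. That entry holds `replicas`, `restartPolicy`, and a container with `image`, `env`, and `resources`. The Python sketch below assembles such an entry from form-style inputs. It is a sketch under stated assumptions, not the console's actual logic:

- The mapping from the console labels to the Kubeflow values `OnFailure` and `Never` is an assumption.
- The image address is assumed to carry no tag.
- `replica_spec` and `image_reference` are hypothetical helpers.

```python
from typing import Optional

# Assumed mapping from the console's restart-policy labels to the Kubeflow
# restartPolicy values (the YAML example on this page uses "Never").
RESTART_POLICIES = {
    "Restart on Failure": "OnFailure",
    "Never Restart": "Never",
}


def image_reference(address: str, version: Optional[str] = None) -> str:
    """Join an untagged image address and a version; default to "latest"."""
    return f"{address}:{version or 'latest'}"


def replica_spec(
    replicas: int,
    restart_label: str,
    image_address: str,
    image_version: Optional[str],
    limits: dict[str, str],
    env: dict[str, str],
) -> dict:
    """Build one entry of mxReplicaSpecs (for example, the Worker role)."""
    if replicas < 1:
        raise ValueError("the number of pods must be at least 1")
    container = {
        "name": "mxnet",
        "image": image_reference(image_address, image_version),
        # Environment variables become a list of name/value pairs.
        "env": [{"name": name, "value": value} for name, value in env.items()],
        "resources": {"limits": limits},
    }
    return {
        "replicas": replicas,
        "restartPolicy": RESTART_POLICIES[restart_label],
        "template": {"spec": {"containers": [container]}},
    }


if __name__ == "__main__":
    worker = replica_spec(
        replicas=2,
        restart_label="Never Restart",
        image_address="registry.baidubce.com/cce-public/kubeflow/cce-public/mxjob/mxnet",
        image_version="gpu",
        limits={"baidu.com/v100_32g_cgpu": "1"},
        env={"CGPU_MEM_ALLOCATOR_TYPE": "1"},
    )
    print(worker["template"]["spec"]["containers"][0]["image"])
```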

- Configure the advanced task settings.

- Image pull credentials: If you use a private image, add the credentials for accessing the private image registry.

- TensorBoard: Enable this option if you need task visualization. After enabling it, specify the "Service Type" and the "Training Log Reading Path".

- Labels: Add Kubernetes labels to the task.

- Annotations: Add annotations to the task.

- Click Finish to create the task.
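In a manifest, the advanced settings have standard Kubernetes counterparts:

- Image pull credentials are usually referenced through the pod-spec field `imagePullSecrets`, which names a registry Secret in the same namespace.
- Labels and annotations are key/value maps under `metadata`.

The Python sketch below applies these settings to an MXJob manifest held as a dict. Placing the labels and annotations on the MXJob's own metadata is an assumption, and `apply_advanced_settings` is a hypothetical helper. TensorBoard is not covered because the page does not describe how it is deployed.

```python
import copy
from typing import Optional


def apply_advanced_settings(
    manifest: dict,
    pull_secret: Optional[str],
    labels: dict[str, str],
    annotations: dict[str, str],
) -> dict:
    """Return a copy of an MXJob manifest with the advanced settings applied.

    Hypothetical helper: task labels and annotations go on the MXJob's own
    metadata, and the pull secret is added to every replica's pod spec.
    """
    result = copy.deepcopy(manifest)  # leave the caller's manifest untouched
    metadata = result.setdefault("metadata", {})
    metadata.setdefault("labels", {}).update(labels)
    metadata.setdefault("annotations", {}).update(annotations)
    if pull_secret:
        for replica in result["spec"]["mxReplicaSpecs"].values():
            pod_spec = replica["template"].setdefault("spec", {})
            # imagePullSecrets is the standard pod-spec field for registry credentials.
            pod_spec.setdefault("imagePullSecrets", []).append({"name": pull_secret})
    return result


if __name__ == "__main__":
    job = {
        "apiVersion": "kubeflow.org/v1",
        "kind": "MXJob",
        "metadata": {"name": "mxnet-job"},
        "spec": {
            "jobMode": "MXTrain",
            "mxReplicaSpecs": {
                "Worker": {"replicas": 1, "template": {"spec": {"containers": []}}},
            },
        },
    }
    updated = apply_advanced_settings(job, "my-registry-secret", {"team": "vision"}, {"owner": "alice"})
    print(updated["spec"]["mxReplicaSpecs"]["Worker"]["template"]["spec"]["imagePullSecrets"])
```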

Example of creating a task with YAML

```yaml
apiVersion: "kubeflow.org/v1"
kind: "MXJob"
metadata:
  name: "mxnet-job"
spec:
  jobMode: MXTrain
  mxReplicaSpecs:
    Scheduler:
      replicas: 1
      restartPolicy: Never
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
            # if your libcuda.so.1 is in a custom path, set the correct path with the following annotation
            # kubernetes.io/baidu-cgpu.nvidia-driver-lib: /usr/lib64
        spec:
          schedulerName: volcano
          containers:
            - name: mxnet
              image: registry.baidubce.com/cce-public/kubeflow/cce-public/mxjob/mxnet:gpu
              resources:
                limits:
                  baidu.com/v100_32g_cgpu: "1"
                  # for gpu core/memory isolation
                  baidu.com/v100_32g_cgpu_core: 5
                  baidu.com/v100_32g_cgpu_memory: "1"
              # if gpu core isolation is enabled, set the following preStop hook for graceful shutdown.
              # `train_mnist.py` needs to be replaced with the name of your gpu process.
              lifecycle:
                preStop:
                  exec:
                    command: [
                        "/bin/sh", "-c",
                        "kill -10 `ps -ef | grep train_mnist.py | grep -v grep | awk '{print $2}'` && sleep 1"
                      ]
    Server:
      replicas: 1
      restartPolicy: Never
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
            # if your libcuda.so.1 is in a custom path, set the correct path with the following annotation
            # kubernetes.io/baidu-cgpu.nvidia-driver-lib: /usr/lib64
        spec:
          schedulerName: volcano
          containers:
            - name: mxnet
              image: registry.baidubce.com/cce-public/kubeflow/cce-public/mxjob/mxnet:gpu
              resources:
                limits:
                  baidu.com/v100_32g_cgpu: "1"
                  # for gpu core/memory isolation
                  baidu.com/v100_32g_cgpu_core: 5
                  baidu.com/v100_32g_cgpu_memory: "1"
              # if gpu core isolation is enabled, set the following preStop hook for graceful shutdown.
              # `train_mnist.py` needs to be replaced with the name of your gpu process.
              lifecycle:
                preStop:
                  exec:
                    command: [
                        "/bin/sh", "-c",
                        "kill -10 `ps -ef | grep train_mnist.py | grep -v grep | awk '{print $2}'` && sleep 1"
                      ]
    Worker:
      replicas: 1
      restartPolicy: Never
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
            # if your libcuda.so.1 is in a custom path, set the correct path with the following annotation
            # kubernetes.io/baidu-cgpu.nvidia-driver-lib: /usr/lib64
        spec:
          schedulerName: volcano
          containers:
            - name: mxnet
              image: registry.baidubce.com/cce-public/kubeflow/cce-public/mxjob/mxnet:gpu
              env:
                # for gpu memory over request, set 0 to disable
                - name: CGPU_MEM_ALLOCATOR_TYPE
                  value: "1"
              command: ["python"]
              args: [
                  "/incubator-mxnet/example/image-classification/train_mnist.py",
                  "--num-epochs", "10", "--num-layers", "2", "--kv-store", "dist_device_sync", "--gpus", "0"
                ]
              resources:
                requests:
                  cpu: 1
                  memory: 1Gi
                limits:
                  baidu.com/v100_32g_cgpu: "1"
                  # for gpu core/memory isolation
                  baidu.com/v100_32g_cgpu_core: 20
                  baidu.com/v100_32g_cgpu_memory: "4"
              # if gpu core isolation is enabled, set the following preStop hook for graceful shutdown.
              # `train_mnist.py` needs to be replaced with the name of your gpu process.
              lifecycle:
                preStop:
                  exec:
                    command: [
                        "/bin/sh", "-c",
                        "kill -10 `ps -ef | grep train_mnist.py | grep -v grep | awk '{print $2}'` && sleep 1"
                      ]
```
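The preStop hook in this example sends signal 10 (`SIGUSR1` on Linux x86-64 and ARM) to the process whose command line contains `train_mnist.py`. Unless the process installs a handler, `SIGUSR1` terminates it. Your own training script may instead need to stop at a step boundary and save its state. In that case it can catch the signal, as in the Python sketch below. The loop, the `on_step` callback, and the checkpoint comment are hypothetical placeholders. The hook returns about one second after sending the signal, and Kubernetes then continues terminating the container, so keep any clean-up short.

```python
import signal
import threading
from typing import Callable, Optional

# Set by the signal handler; the training loop checks it between steps.
stop_requested = threading.Event()


def handle_sigusr1(signum, frame) -> None:
    """Only record the request; keep work inside a signal handler minimal."""
    stop_requested.set()


def train(max_steps: int, on_step: Optional[Callable[[int], None]] = None) -> int:
    """Hypothetical training loop that stops early once SIGUSR1 arrives.

    Returns the number of steps completed. Must run in the main thread,
    because Python only allows installing signal handlers there.
    """
    stop_requested.clear()  # start each run with a fresh flag
    signal.signal(signal.SIGUSR1, handle_sigusr1)
    completed = 0
    while completed < max_steps and not stop_requested.is_set():
        completed += 1  # placeholder for one real training step
        if on_step is not None:
            on_step(completed)
    # A real script would save a checkpoint here before exiting.
    return completed


if __name__ == "__main__":
    def simulate_prestop(step: int) -> None:
        # Stand-in for the hook's `kill -10`: raise SIGUSR1 in this process.
        if step == 3:
            signal.raise_signal(signal.SIGUSR1)

    print(train(100, on_step=simulate_prestop))  # prints 3
```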