Ascend node resources

Updated at: 2025-10-27

Prerequisites

- The CCE Ascend MindX DL component has been installed.

- The cluster has been connected to a monitoring instance.

- Collection tasks have been enabled. For details, see Access Monitoring Instance and Enable Collection Tasks.

How to use

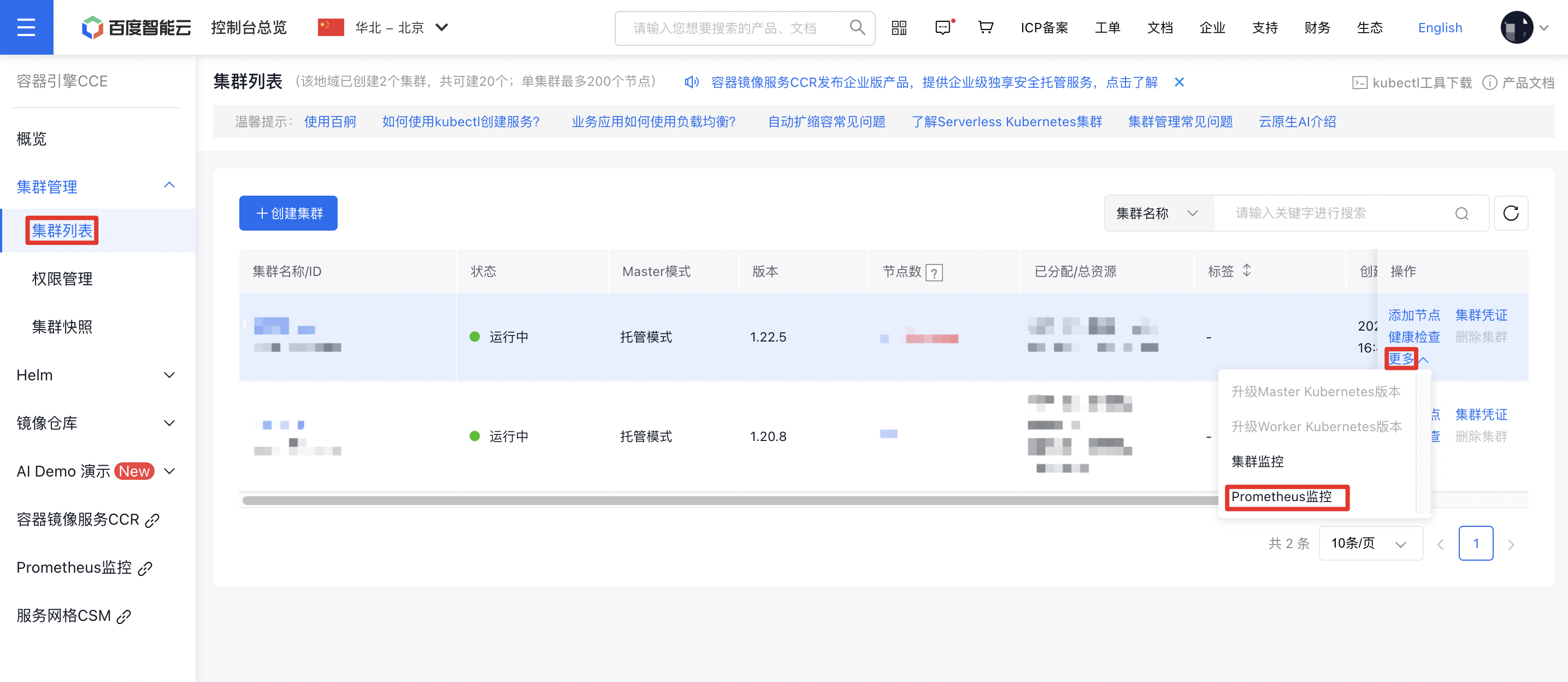

- Sign in to the Cloud Container Engine (CCE) console.

- Click Cluster Management in the left sidebar. In the Cluster List, find the cluster you need. In the Actions column on the right, click More, then click Prometheus Monitoring to open the Prometheus Monitoring Service.

- In the options at the bottom of the Prometheus Monitoring page, select Cloud-Native AI Monitoring, then select Ascend Node Resources.

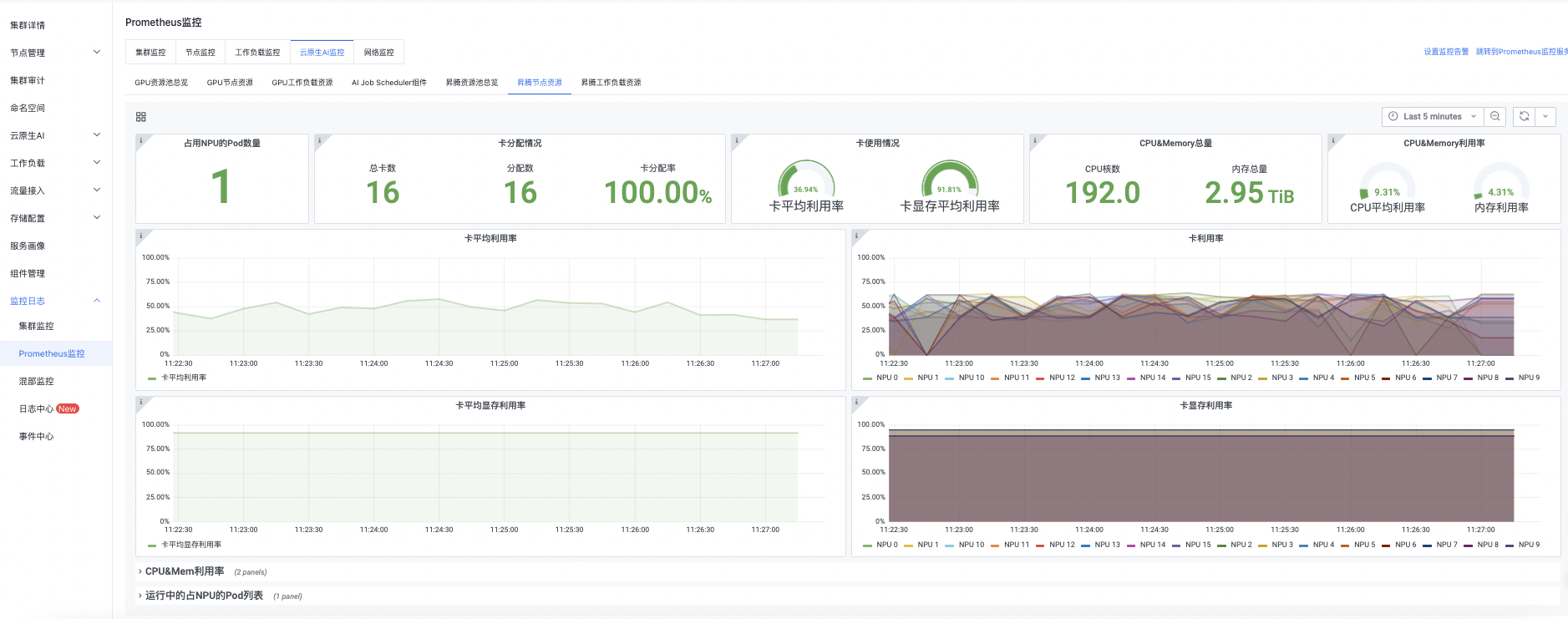

The Ascend node resources dashboard is displayed as follows.

Use the button in the upper-right corner to set the monitoring time range, refresh the data manually, or enable automatic refresh.

Detailed description of Ascend node resources

Count of NPU-occupied Pods

| Monitoring item | Description |
|---|---|
| Count of NPU-occupied Pods | Number of NPU-occupied Pods on the current node |

NPU card allocation

| Monitoring item | Description |
|---|---|
| Total NPU cards | Total number of NPU cards on the current node |
| Allocation count | Number of allocated NPU cards on the current node |
| Card allocation rate | Allocation rate = allocated NPU cards / total NPU cards |
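
The allocation rate formula above can be illustrated with a minimal sketch. The function name and the sample values (6 allocated cards out of 8) are made up for illustration and are not taken from the dashboard or from any CCE API; returning 0 for a node with no cards is also an assumption.

```python
def allocation_rate(allocated_cards: int, total_cards: int) -> float:
    """Return allocated NPU cards / total NPU cards as a fraction.

    Hypothetical helper for illustration only. A node that reports
    no cards is treated as 0 here to avoid division by zero.
    """
    if total_cards <= 0:
        return 0.0
    return allocated_cards / total_cards


# Illustrative values: 6 of 8 NPU cards on the node are allocated.
rate = allocation_rate(allocated_cards=6, total_cards=8)
print(f"{rate:.0%}")  # prints 75%
```

The rate describes how many cards are assigned to Pods, not how busy those cards are. A node can show a high allocation rate and a low utilization rate at the same time.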

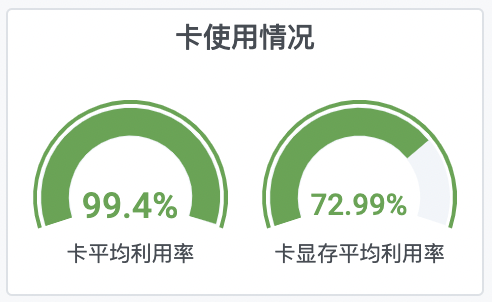

NPU card usage

| Monitoring item | Description |
|---|---|
| Average NPU card utilization rate | Real-time average utilization rate of all NPU cards on the current node. Average utilization rate = sum(utilization rate of all NPU cards) / total NPU cards |
| Average NPU card memory utilization rate | Real-time average memory utilization rate of all NPU cards on the current node. Average memory utilization rate = sum(memory utilization rate of all NPU cards) / total NPU cards |
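
As a rough sketch of the averaging described above, the same calculation applies to both the utilization rate and the memory utilization rate. The function name and the per-card percentages are hypothetical; they only show that the average is an unweighted mean over all cards on the node.

```python
from typing import Sequence


def average_utilization(per_card_percent: Sequence[float]) -> float:
    """Return sum(per-card utilization) / number of cards.

    Hypothetical helper for illustration only. An empty input
    (no cards reported) is treated as 0 by assumption.
    """
    if not per_card_percent:
        return 0.0
    return sum(per_card_percent) / len(per_card_percent)


# Illustrative values: four NPU cards with different loads (percent).
samples = [80.0, 60.0, 40.0, 20.0]
print(average_utilization(samples))  # prints 50.0
```

Because every card counts equally, one idle card lowers the node average even when the other cards are fully loaded.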

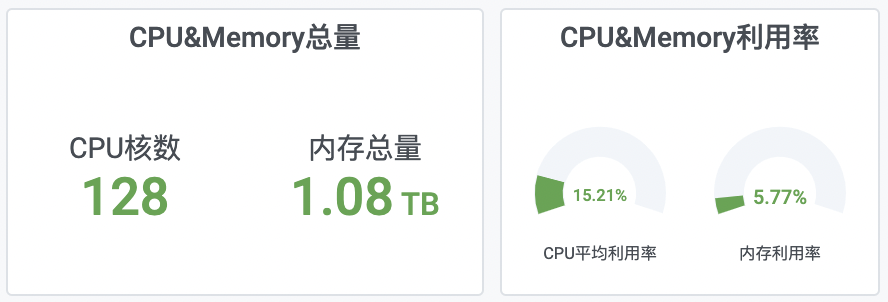

Total CPU and memory / utilization rate

| Monitoring item | Description |
|---|---|
| CPU core count | Total number of CPU cores on the current node |
| Average CPU utilization rate | Real-time average utilization rate of all CPUs on the current node |
| Total memory | Total memory of the current node |
| Memory utilization rate | Real-time memory utilization rate of the current node |

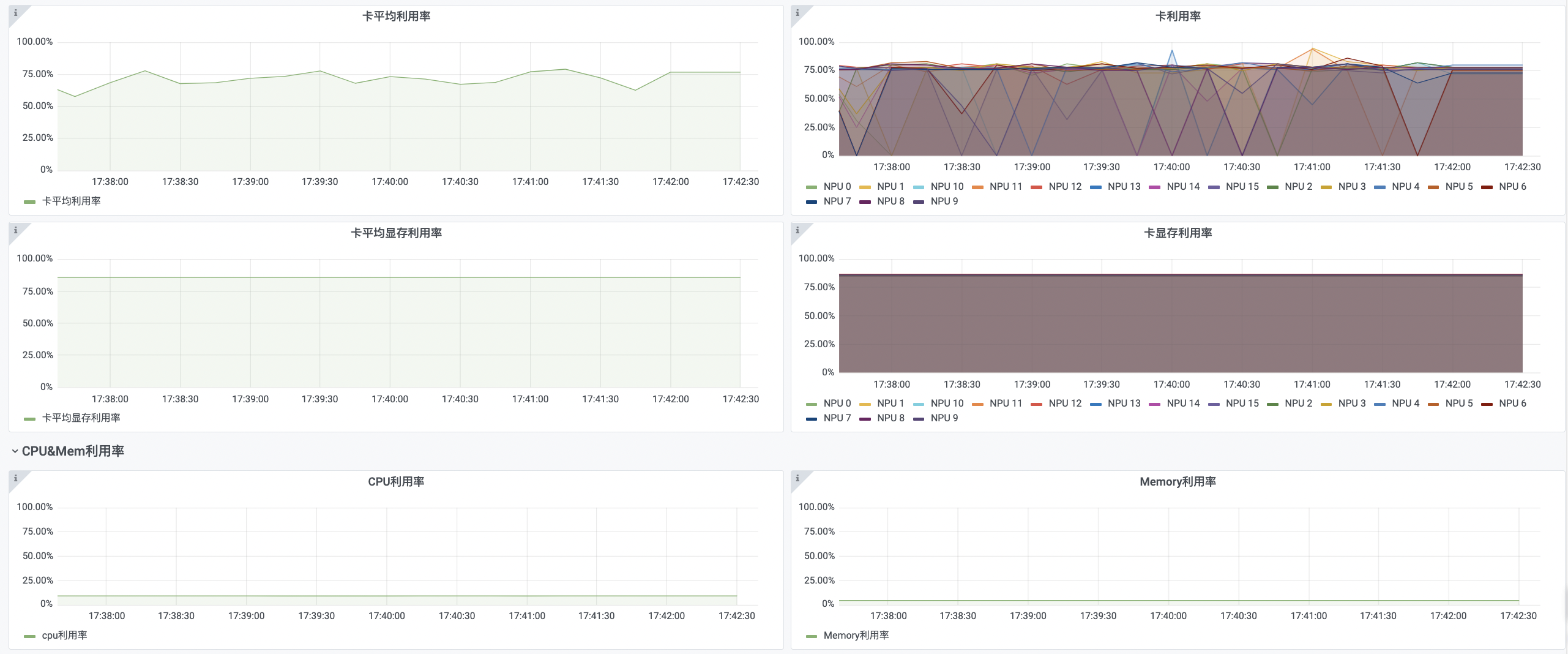

Utilization rate

| Monitoring item | Description |
|---|---|
| Average NPU card utilization rate | Real-time average utilization rate of all NPU cards on the current node. Average utilization rate = sum(utilization rate of all NPU cards) / total NPU cards |
| NPU card utilization rate | Real-time utilization rate of all NPU cards on the current node |
| Average NPU card memory utilization rate | Real-time average memory utilization rate of all NPU cards on the current node. Average memory utilization rate = sum(memory utilization rate of all NPU cards) / total NPU cards |
| NPU card memory utilization rate | Real-time memory utilization rate of all NPU cards on the current node |
| CPU utilization rate | Real-time utilization rate of all CPUs on the current node |
| Memory utilization rate | Real-time memory utilization rate of the current node |

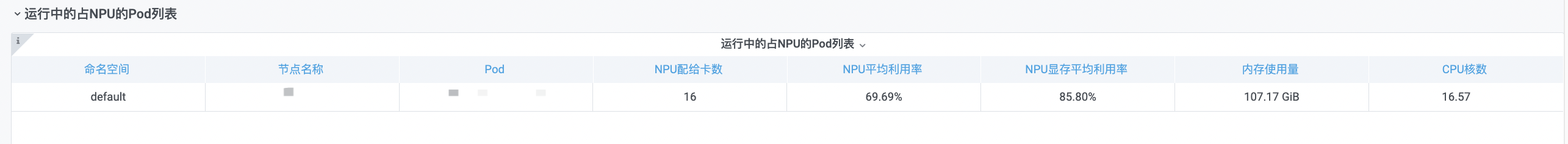

List of running NPU-occupied Pods

| Monitoring item | Description |
|---|---|
| Namespace | Namespace of the NPU-occupied Pod running on the current node |
| Node name | Name of the node where the NPU-occupied Pod is running |
| Pod | Name of the NPU-occupied Pod running on the current node |
| Name of workload | Name of the workload that the NPU-occupied Pod belongs to |
| Allocated NPU cards | Number of NPU cards allocated to the NPU-occupied Pod |
| Average NPU utilization rate | Real-time average utilization rate of the NPU cards allocated to the NPU-occupied Pod |
| Average NPU memory utilization rate | Real-time average memory utilization rate of the NPU cards allocated to the NPU-occupied Pod |
| Memory usage | Memory usage of the NPU-occupied Pod |
| CPU core count | Number of CPU cores of the NPU-occupied Pod |