Customize Node Group Kubelet Configuration

If the default kubelet parameters do not meet your business requirements and you need to customize node behavior, you can configure kubelet parameters at the node group level. For example, you can adjust resource reservations to control resource usage, or customize node-pressure eviction thresholds to prevent resource shortages. CCE supports configuring node kubelet parameters through the console. This document describes the configuration process.

Prerequisites

- A CCE cluster has been created.

- A node group has been created in the cluster.

Usage restrictions

- The kubelet parameters that can be customized depend on the cluster version. For details, see Supported kubelet parameters.

- Custom kubelet parameters remain in effect after the cluster is scaled out.

Note

- Custom kubelet parameters are applied to the existing nodes in the node group in batches, and nodes added to the node group later also use the updated configuration. Applying the change restarts the kubelet process, which may affect the nodes and the workloads running on them. Perform this operation during off-peak hours.

- If evictionHard, kubeReserved, or systemReserved is not configured, the system uses the default resource reservation values. For details, see CCE Node Resource Reservation Policy.

- Modifying the resource reservation configuration (for example, increasing the reserved resources) may reduce the allocatable resources on a node. On nodes with high resource usage, this may trigger pod eviction.

- Node group operations are not supported while kubelet parameters are being updated.
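The effect of reservations on allocatable resources follows the standard Kubernetes node-allocatable formula: Allocatable = Capacity - kubeReserved - systemReserved - hard eviction threshold. The following is a minimal sketch that applies this formula to memory. The node size and reservation figures are hypothetical examples, not CCE defaults (see CCE Node Resource Reservation Policy for those), and percentage-based thresholds are not handled.

```python
# Minimal sketch of the Kubernetes node-allocatable formula for memory:
#   allocatable = capacity - kubeReserved - systemReserved - evictionHard
# All figures below are hypothetical examples, not CCE defaults.

_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def to_bytes(quantity: str) -> int:
    """Convert a binary-suffixed quantity such as '100Mi' or '16Gi' to bytes."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count without a suffix


def allocatable_memory(capacity: str, kube_reserved: str,
                       system_reserved: str, eviction_hard: str) -> int:
    """Return allocatable memory in bytes for the given node settings."""
    return (to_bytes(capacity) - to_bytes(kube_reserved)
            - to_bytes(system_reserved) - to_bytes(eviction_hard))


if __name__ == "__main__":
    # Hypothetical 16Gi node with the 100Mi memory.available hard threshold.
    before = allocatable_memory("16Gi", "1Gi", "500Mi", "100Mi")
    # Doubling kubeReserved shrinks allocatable memory by the same 1Gi.
    after = allocatable_memory("16Gi", "2Gi", "500Mi", "100Mi")
    print(before // 1024**2, "Mi ->", after // 1024**2, "Mi")  # 14760 Mi -> 13736 Mi
```

Because every reserved byte is subtracted from allocatable capacity, pods already scheduled close to the old limit can exceed the new one, which is why raising reservations on busy nodes may lead to eviction.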

Supported kubelet parameters

The following table lists the kubelet parameters that can be customized.

Note

Changing kubelet parameters such as topologyManagerPolicy, topologyManagerScope, or the QoSResourceManager feature gate in featureGates restarts the kubelet, which recalculates resource allocation for containers. This may cause running containers to restart or resource allocation to fail, so proceed with caution. As Kubernetes evolves, some parameters or feature gates may be deprecated or removed from the codebase. If a custom parameter managed by CCE is no longer compatible with the new Kubernetes version, the corresponding configuration is deprecated and removed during the cluster upgrade.

| Field | Description | Default value | Recommended value range |
|---|---|---|---|
| kubeReserved | Resources reserved for Kubernetes system components. | Automatically configured based on node specifications. | For details, see CCE Resource Reservation Description. |
| systemReserved | Resources reserved for operating system components. | Automatically configured based on node specifications. | For details, see CCE Resource Reservation Description. |
| allowedUnsafeSysctls | Allowed unsafe sysctls or sysctl patterns. Wildcard patterns must end with *. Separate multiple entries with half-width commas (,). | Not applicable | Sysctls with the following prefixes are supported: |
| containerLogMaxFiles | The maximum number of log files per container. The value must be at least 2, and the container runtime must be containerd. | 5 | 2~10 |
| containerLogMaxSize | The maximum size a container log file can reach before it is rotated. The container runtime must be containerd. | 10Mi | Not applicable |
| cpuCFSQuota | Whether to enforce CPU CFS quota for containers that specify CPU limits. | true | Optional values: true, false |
| cpuCFSQuotaPeriod | The CPU CFS quota period. The CustomCPUCFSQuotaPeriod feature gate must be enabled. | 100ms | 1ms to 1s (inclusive) |
| cpuManagerPolicy | The CPU manager policy for the node. | none | Optional values: none, static |
| eventBurst | The maximum size of a burst of event creations. Event creation may temporarily burst up to this number while the average rate still does not exceed eventRecordQPS. Used only when eventRecordQPS is greater than 0. | 50 | 1~100 |
| eventRecordQPS | The maximum number of events created per second. | 50 | 1~50 |
| evictionHard | A set of hard eviction thresholds. Reaching any threshold triggers pod eviction. | "imagefs.available": "15%", "memory.available": "100Mi", "nodefs.inodesFree": "5%", "nodefs.available": "7%" | Not applicable |
| evictionSoft | A set of soft eviction thresholds. A soft threshold triggers eviction only after it has been exceeded for its grace period. | None | Not applicable |
| evictionSoftGracePeriod | A set of grace periods for the soft eviction thresholds. | "5m" | Not applicable |
| featureGates | A set of feature gates that toggle experimental features, each specified as key=value. For more information, see Feature Gates. | Not applicable | Not applicable |
| imageGCHighThresholdPercent | The disk usage percentage above which image garbage collection always runs. The percentage is the field value divided by 100, so the value must be between 0 and 100 (inclusive). It must also be greater than imageGCLowThresholdPercent. | 85 | 60~95 |
| imageGCLowThresholdPercent | The disk usage percentage below which image garbage collection never runs. It is also the lowest disk usage that garbage collection frees space down to. The percentage is the field value divided by 100, so the value must be between 0 and 100 (inclusive). It must also be less than imageGCHighThresholdPercent. | 80 | 30~90 |
| kubeAPIBurst | The maximum burst of requests sent to the API server. | 500 | 1~500 |
| kubeAPIQPS | The maximum number of requests per second sent to the API server. | 500 | 1~500 |
| maxPods | The maximum number of pods that can run on the node. | 128 | Not applicable; depends on factors such as instance specifications and the container network configuration. |
| memoryManagerPolicy | The memory manager policy. | None | Optional values: None, Static. Additional operations are required before using Static. |
| podPidsLimit | The maximum number of process IDs (PIDs) per pod. | -1 | Depends on your requirements. The default value -1 (no limit) is recommended. |
| readOnlyPort | The kubelet read-only port, which serves requests without authentication or authorization. Valid values are 1 to 65535 (inclusive); 0 disables the read-only port. Note: exposing the kubelet read-only port (10255) is a security risk. | 0 | 0 |
| registryPullQPS | The maximum number of image pulls per second. A value greater than 0 limits image registry pulls to this rate; 0 means no limit. | 5 | 1~50 |
| registryBurst | The maximum size of a burst of image pulls. Image pulls may temporarily burst up to this number while the average rate still does not exceed registryPullQPS. Used only when registryPullQPS is greater than 0. | 10 | 1~100 |
| reservedMemory | The list of memory reservations for NUMA nodes. | Not applicable | Not applicable |
| resolvConf | The DNS resolver configuration file used by containers. | /etc/resolv.conf | Not applicable |
| runtimeRequestTimeout | The timeout for all runtime requests except long-running requests such as pull, logs, exec, and attach. | 120s | Not applicable |
| serializeImagePulls | Whether to pull images one at a time. Keep the default value on nodes that run Docker daemon versions earlier than 1.9 or use the aufs storage backend. | true | Optional values: true, false |
| topologyManagerPolicy | The topology manager policy. On NUMA architectures, the topology manager can align resource allocations on the same NUMA node to reduce cross-node access and improve performance. For more information, see Control Topology Management Policies on a Node. | none | Optional values: none, best-effort, restricted, single-numa-node |
| topologyManagerScope | The granularity at which the topology manager aligns resources. Topology hints are generated by hint providers and passed to the topology manager. | container | Optional values: container, pod |
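Several rows in the table above carry cross-field constraints, for example imageGCHighThresholdPercent must be greater than imageGCLowThresholdPercent. The following is a minimal sketch of a pre-submit check for a few of these rules, assuming the configuration is held as a plain Python dict keyed by kubelet field names. The validate function is hypothetical and is not part of CCE or its console; missing fields fall back to the defaults listed in the table.

```python
# Hypothetical pre-submit checks derived from constraints in the table.
# Illustrative sketch only; this is not CCE's validation logic.


def validate(config: dict) -> list:
    """Return a list of problems found in a kubelet config dict."""
    errors = []

    # Image GC thresholds: each within 0-100, and high strictly above low.
    high = config.get("imageGCHighThresholdPercent", 85)
    low = config.get("imageGCLowThresholdPercent", 80)
    for name, value in (("imageGCHighThresholdPercent", high),
                        ("imageGCLowThresholdPercent", low)):
        if not 0 <= value <= 100:
            errors.append(f"{name} must be between 0 and 100")
    if high <= low:
        errors.append("imageGCHighThresholdPercent must be greater than "
                      "imageGCLowThresholdPercent")

    # containerLogMaxFiles must be at least 2.
    if config.get("containerLogMaxFiles", 5) < 2:
        errors.append("containerLogMaxFiles must be at least 2")

    # readOnlyPort: 0 disables the port, otherwise 1-65535.
    if not 0 <= config.get("readOnlyPort", 0) <= 65535:
        errors.append("readOnlyPort must be 0 (disabled) or 1-65535")

    return errors


if __name__ == "__main__":
    print(validate({"imageGCHighThresholdPercent": 80,
                    "imageGCLowThresholdPercent": 85}))
```

Note that both values in the example fall inside their individual recommended ranges (60~95 and 30~90), yet the pair is still invalid; checking fields in isolation is not enough.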

Customize node group kubelet parameters via the console

Applying custom kubelet parameters restarts the kubelet process, which may affect your workloads. Perform this operation during off-peak hours.

- Sign in to the Cloud Container Engine (CCE) console.

- In the left navigation pane, select Cluster List.

- On the Cluster List page, click the target cluster to open its management page.

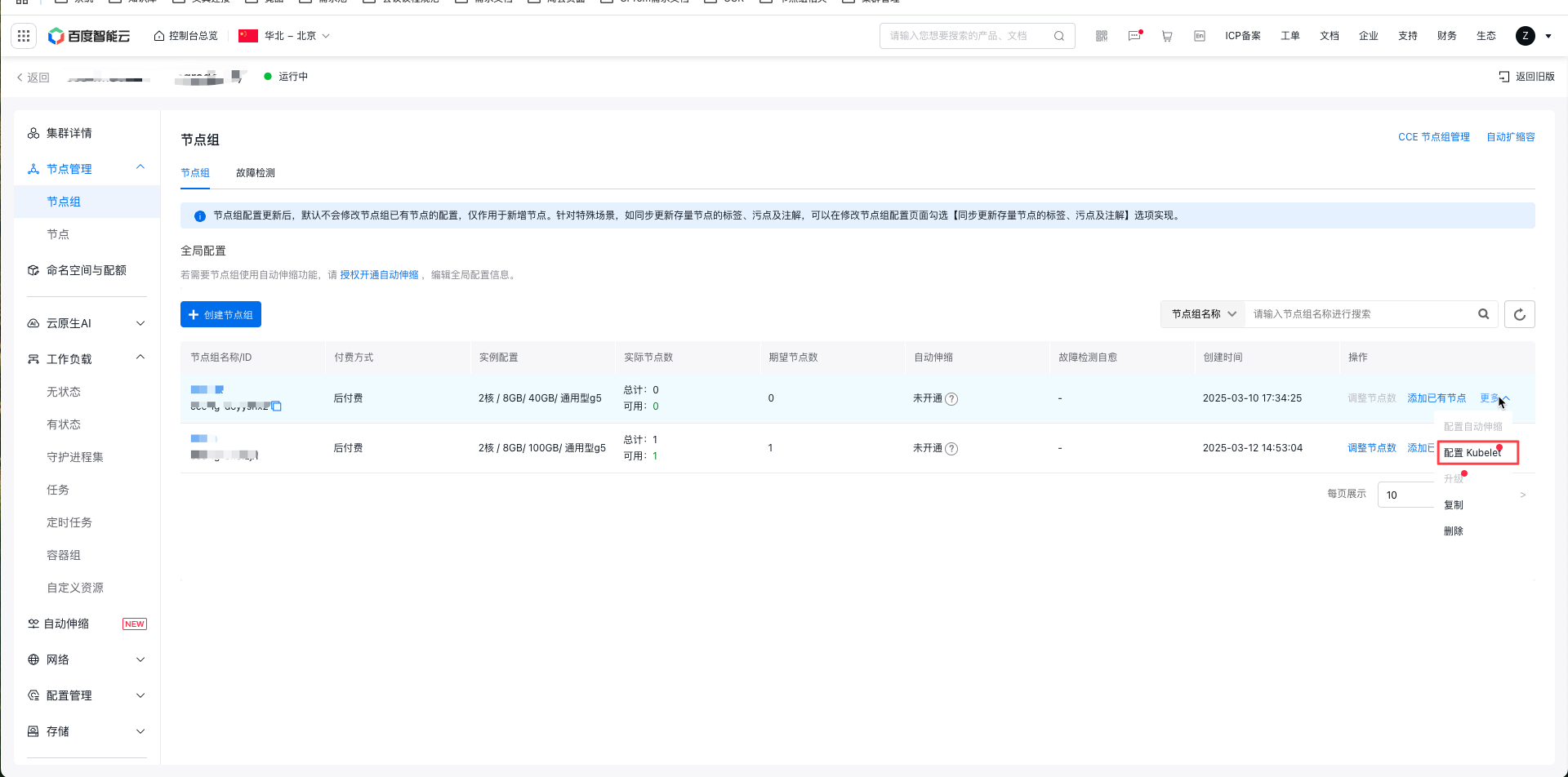

- On the cluster management page, select Node Management > Node Group in the left navigation pane.

- On the Node Group List page, click More > {-1-Configure Kubelet

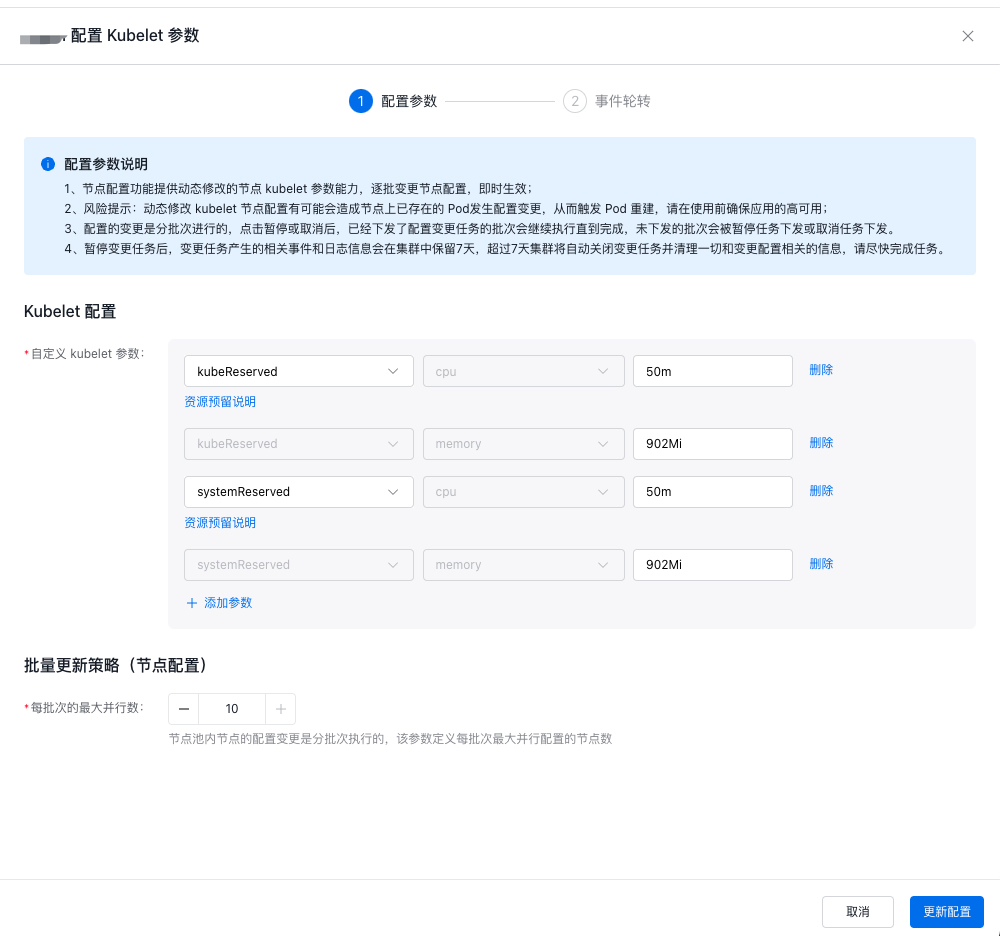

under the target node group's operation column - Carefully read the notes on the current page, click Custom Parameters to select the parameters to configure, set the maximum parallel count per batch, and then click Submit and follow the page instructions to complete the operation.

After you set Maximum Parallel Count per Batch (recommended value: 10), the kubelet configuration is applied to nodes in batches, which takes some time. You can view the progress in the event list and control the execution by suspending, resuming, or canceling the task.

You can suspend the task to verify the nodes that have already been configured. When the task is suspended, nodes that are currently being configured finish their configuration, while the remaining nodes are not configured until the task is resumed.
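The batched execution and suspend behavior described above can be modeled roughly as follows. This is a hypothetical sketch of the observable behavior, not CCE's implementation: the BatchRollout class and its methods are illustrative names, and "configuring" a node is reduced to recording its name.

```python
# Hypothetical model of a batched kubelet rollout with suspend/resume.
# It mirrors the documented behavior; it is not CCE's implementation.
from typing import List


class BatchRollout:
    def __init__(self, nodes: List[str], max_parallel: int = 10):
        if max_parallel < 1:
            raise ValueError("max_parallel must be at least 1")
        # Split nodes into batches of at most max_parallel nodes each.
        self.batches = [nodes[i:i + max_parallel]
                        for i in range(0, len(nodes), max_parallel)]
        self.next_batch = 0
        self.configured: List[str] = []
        self.suspended = False

    def suspend(self) -> None:
        # A batch already in progress is allowed to finish (see step());
        # only batches that have not started are held back.
        self.suspended = True

    def resume(self) -> None:
        self.suspended = False

    def step(self) -> bool:
        """Apply the next batch; return False if suspended or finished."""
        if self.suspended or self.next_batch >= len(self.batches):
            return False
        # Stand-in for applying the config and restarting kubelet.
        self.configured.extend(self.batches[self.next_batch])
        self.next_batch += 1
        return True

    def run(self) -> None:
        """Apply batches in order until done or suspended."""
        while self.step():
            pass


if __name__ == "__main__":
    rollout = BatchRollout([f"node-{i}" for i in range(25)], max_parallel=10)
    rollout.step()        # first batch: node-0 to node-9
    rollout.suspend()     # verify the configured nodes before continuing
    rollout.run()         # no-op while suspended
    print(len(rollout.configured))  # 10
    rollout.resume()
    rollout.run()         # remaining batches of 10 and 5 nodes
    print(len(rollout.configured))  # 25
```

With 25 nodes and a parallel count of 10, the rollout runs three batches (10, 10, and 5 nodes), so suspending after the first batch leaves 15 nodes on the old configuration until the task is resumed.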

Note

Complete custom configuration tasks promptly. A task that remains suspended for 7 days is automatically canceled, and all related events and logs are cleared.