GPU resource pool overview

Updated at: 2025-10-27

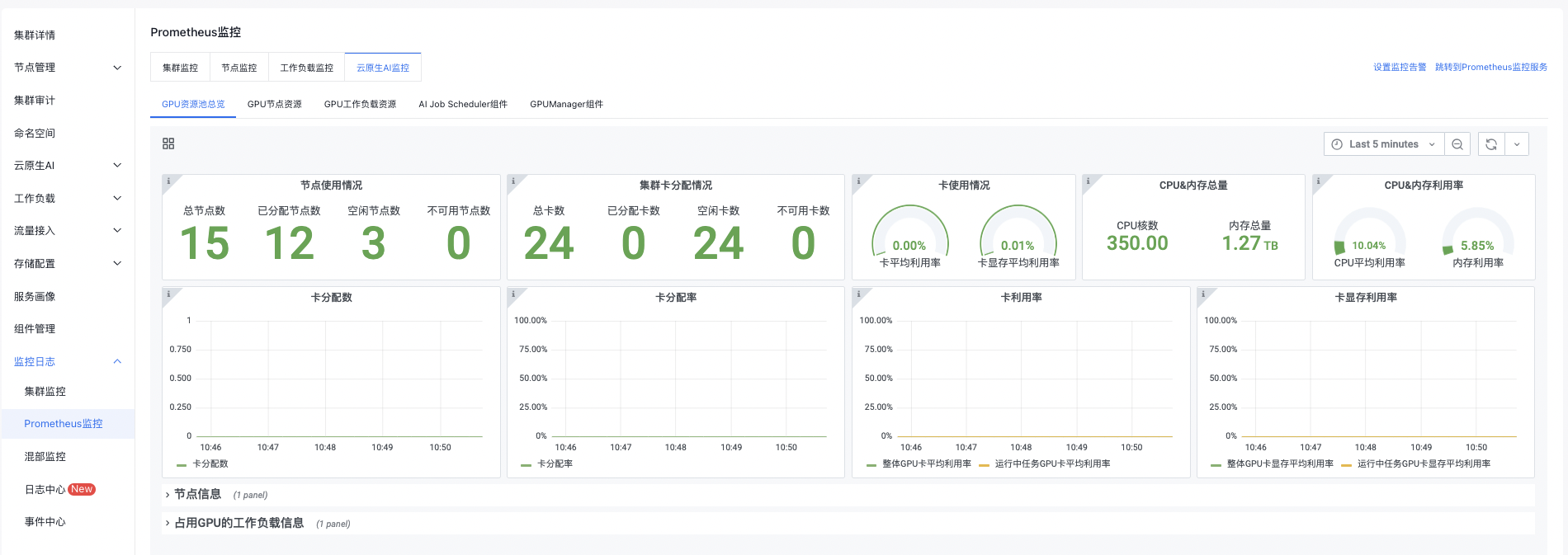

The GPU resource pool overview shows node usage, cluster GPU card allocation, GPU card usage, CPU and memory utilization, the number of allocated GPU cards, the GPU card allocation rate, GPU card utilization, GPU card memory utilization, node details, and the workloads that use GPU resources.

Prerequisites

- The CCE AI Job Scheduler component is installed, version 1.7.9 or later.

- The CCE GPU Manager component is installed.

- A monitoring instance is connected to the cluster.

- Collection tasks are enabled. For details, see the document: Access Monitoring Instance and Enable Collection Tasks.
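The version requirement above is easy to misjudge when versions are compared as strings (for example, "1.7.10" sorts before "1.7.9" as text). The following is a minimal sketch of a numeric comparison. The helper names `parse_version` and `meets_minimum` are hypothetical, and the sketch assumes the component reports a plain dotted numeric version such as `1.7.9`, optionally prefixed with `v`.

```python
def parse_version(text):
    """Turn '1.7.10' or 'v1.7.10' into (1, 7, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.lstrip("v").split("."))


def meets_minimum(installed, minimum="1.7.9"):
    """Return True when the installed version is at least the required one."""
    return parse_version(installed) >= parse_version(minimum)


# Hypothetical installed versions, for illustration only.
newer_patch_ok = meets_minimum("1.7.10")   # numeric compare: 10 >= 9
older_patch_ok = meets_minimum("1.7.8")
```

A version string with a non-numeric suffix (for example a pre-release tag) would need extra handling that this sketch does not attempt.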

Procedure

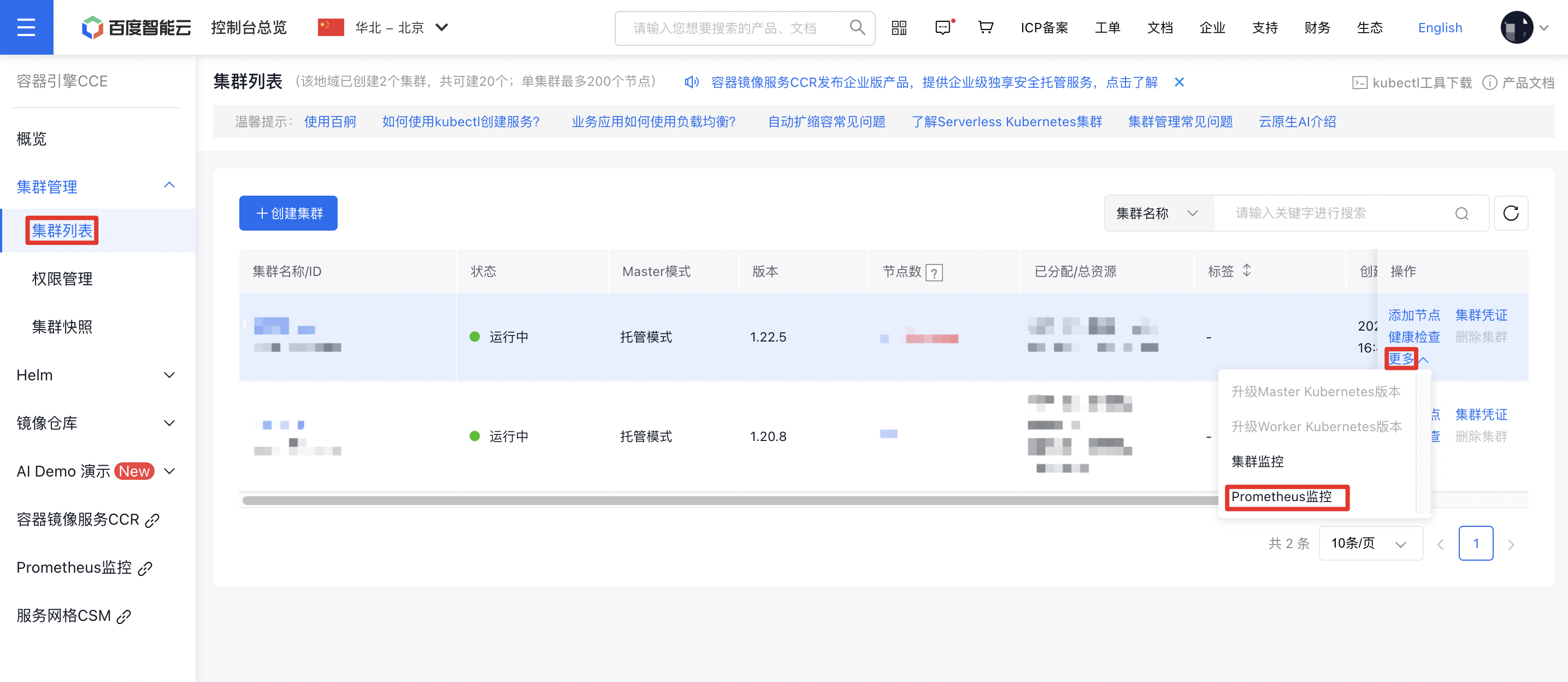

- Sign in to the Cloud Container Engine (CCE) console.

- Click Cluster Management in the left sidebar. In the cluster list, find the target cluster, then under Actions - More on the right, click Prometheus Monitoring to open the Prometheus Monitoring Service.

- At the bottom of the Prometheus Monitoring page, select Cloud-Native AI Monitoring, then select GPU Resource Pool Overview.

GPU resource pool overview is shown below:

Use the controls in the upper-right corner to set the monitoring time range, refresh manually, or configure automatic refresh.

Detailed explanation of GPU resource pool overview

Node usage

| Monitoring item | Description |
|---|---|
| Total node count | Number of all nodes in the cluster |
| Allocated node count | Number of nodes with 0 available GPU cards |
| Idle node count | Number of nodes with more than 0 idle GPU cards, including tainted nodes |
| Unavailable node count | Number of cordoned or not-ready nodes |
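The node categories above can be read as a simple classification rule. The sketch below is illustrative only: the `Node` type and its fields are hypothetical, and it assumes that a cordoned or not-ready node is counted as unavailable before its GPU cards are considered, which the dashboard description does not state explicitly.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical per-node snapshot; not an actual CCE data structure."""
    name: str
    total_gpus: int
    allocated_gpus: int
    schedulable: bool = True  # False when the node is cordoned
    ready: bool = True


def classify(node):
    """Map a node to one of the dashboard categories (assumed precedence)."""
    if not node.schedulable or not node.ready:
        return "unavailable"
    if node.total_gpus - node.allocated_gpus > 0:
        return "idle"        # at least one GPU card is still free
    return "allocated"       # 0 available GPU cards


nodes = [
    Node("node-a", total_gpus=8, allocated_gpus=8),
    Node("node-b", total_gpus=8, allocated_gpus=3),
    Node("node-c", total_gpus=8, allocated_gpus=0, schedulable=False),
    Node("node-d", total_gpus=4, allocated_gpus=4, ready=False),
]
counts = Counter(classify(n) for n in nodes)
total_node_count = len(nodes)
```

With this sample data, one node is allocated, one is idle, and two are unavailable.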

Cluster card allocation

| Monitoring item | Description |
|---|---|
| Total GPU cards | Number of GPU cards across all nodes in the cluster |
| Allocated cards | Number of GPU cards that are allocated and in use |
| Idle GPU cards | Number of idle GPU cards across nodes in the cluster, including idle GPU cards on tainted nodes |
| Unavailable GPU cards | Number of GPU cards on unavailable (cordoned or not-ready) nodes |

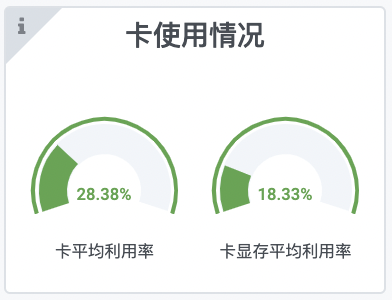

GPU card usage

| Monitoring item | Description |
|---|---|
| Average GPU card utilization rate | Real-time average utilization rate of the GPU cards across all nodes in the current cluster. Average GPU card utilization rate = sum(utilization rate of each GPU card) / total number of GPU cards |
| Average memory utilization rate of GPU card | Real-time average memory utilization rate of the GPU cards across all nodes in the current cluster. Average memory utilization rate = sum(memory utilization rate of each GPU card) / total number of GPU cards |
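Both averages above are unweighted means over all GPU cards in the cluster. A minimal sketch of that arithmetic follows. The function name `average_rate` and the sample values are hypothetical, and returning 0.0 for a cluster with no GPU cards is an assumption made here to avoid division by zero.

```python
def average_rate(per_card_rates):
    """sum(rate of each GPU card) / number of GPU cards; 0.0 if there are no cards."""
    rates = list(per_card_rates)
    return sum(rates) / len(rates) if rates else 0.0


# Hypothetical per-card samples, in percent, for a four-card cluster.
gpu_util = [90.0, 40.0, 0.0, 10.0]
gpu_mem_util = [80.0, 20.0, 0.0, 20.0]

avg_gpu_util = average_rate(gpu_util)          # 140.0 / 4
avg_gpu_mem_util = average_rate(gpu_mem_util)  # 120.0 / 4
```

Because idle cards contribute 0 to the sum but still count in the denominator, a cluster with many unallocated cards shows a low average even when the busy cards are saturated.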

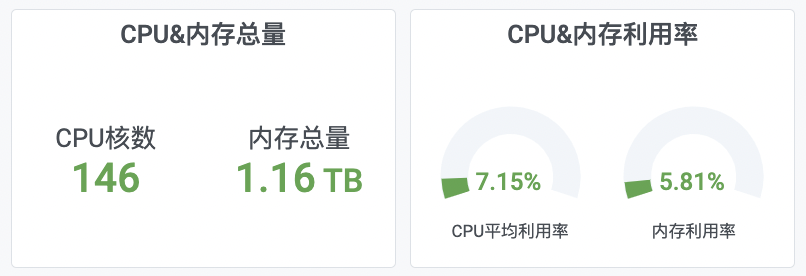

CPU & memory

| Monitoring item | Description |
|---|---|
| CPU core count | Total CPU core count in the current cluster |
| Average CPU utilization rate | Real-time average utilization rate of all CPUs in the current cluster |
| Total memory | Total memory of the current cluster |
| Average memory utilization rate | Real-time average utilization rate of all memory in the current cluster |

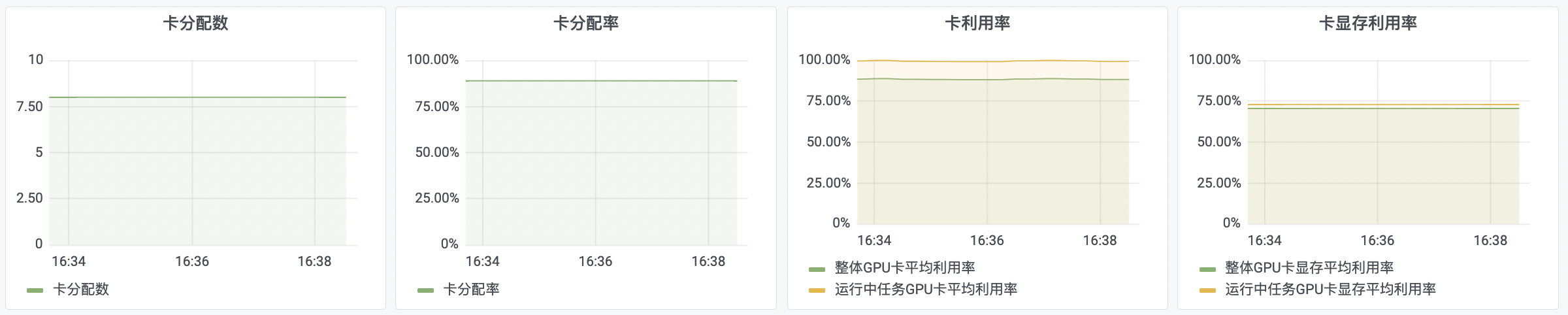

Utilization rate & allocation rate

| Monitoring item | Description |
|---|---|
| Allocated GPU cards | Number of allocated GPU cards |
| Card allocation rate | Allocation rate = allocated GPU cards / total GPU cards |
| Overall average GPU utilization rate | Real-time average utilization rate of the GPU cards across all nodes in the current cluster. Average utilization rate = sum(utilization rate of each GPU card) / total number of GPU cards |
| Average GPU utilization rate of running tasks | Average GPU utilization rate = sum(utilization rate of each allocated GPU card) / number of allocated GPU cards |
| Average overall GPU memory utilization rate | Real-time average memory utilization rate of the GPU cards across all nodes in the current cluster. Average memory utilization rate = sum(memory utilization rate of each GPU card) / total number of GPU cards |
| Average GPU memory utilization rate of running tasks | Average GPU memory utilization rate = sum(memory utilization rate of each allocated GPU card) / number of allocated GPU cards |
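The difference between the "overall" and "running tasks" figures is only the denominator: all cards versus allocated cards. The sketch below works through the formulas in the table with made-up numbers. The `Card` type, its fields, and the sample values are hypothetical and are not taken from the dashboard.

```python
from dataclasses import dataclass


@dataclass
class Card:
    """Hypothetical per-card sample; rates are in percent."""
    util: float
    mem_util: float
    allocated: bool


def mean(values):
    """Arithmetic mean, or 0.0 for an empty input (an assumption of this sketch)."""
    values = list(values)
    return sum(values) / len(values) if values else 0.0


cards = [
    Card(util=90.0, mem_util=80.0, allocated=True),
    Card(util=50.0, mem_util=40.0, allocated=True),
    Card(util=0.0, mem_util=0.0, allocated=False),
    Card(util=0.0, mem_util=0.0, allocated=False),
]
allocated = [c for c in cards if c.allocated]

allocated_gpu_cards = len(allocated)
allocation_rate = len(allocated) / len(cards)          # allocated / total
overall_util = mean(c.util for c in cards)             # over all cards
running_util = mean(c.util for c in allocated)         # over allocated cards only
overall_mem_util = mean(c.mem_util for c in cards)
running_mem_util = mean(c.mem_util for c in allocated)
```

In this example half of the cards are allocated, so the running-task averages (70 and 60 percent) are twice the overall averages (35 and 30 percent).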

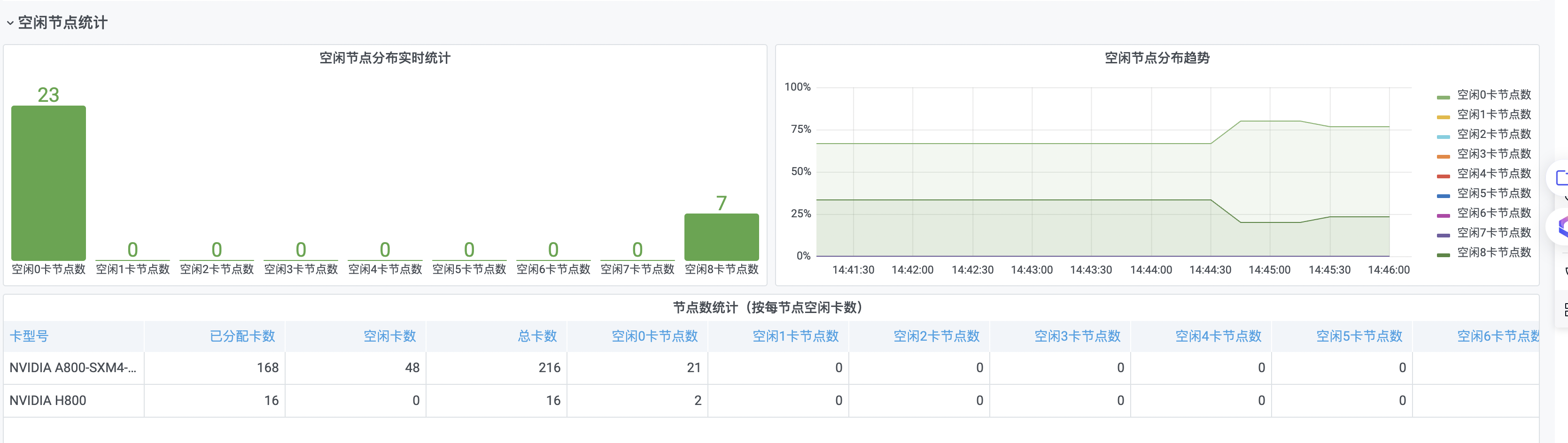

Idle node statistics

| Monitoring item | Description |
|---|---|
| Real-time statistics of idle node distribution | Current distribution of idle nodes in the cluster |
| Trend of idle node distribution | Historical trend of the idle node distribution in the cluster |
| Card model | GPU card models present in the current cluster |
| Allocated cards | Number of GPU cards of this model that are allocated and in use in the cluster |
| Idle GPU cards | Number of idle, unallocated GPU cards of this model in the cluster |
| Total GPU cards | Total number of GPU cards of this model in the cluster |
| Count idle x card nodes | Number of nodes in the cluster that each have x idle GPU cards |
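The "Count idle x card nodes" item is a histogram: for each value of x, it counts the nodes that have exactly x idle GPU cards. A minimal sketch follows, assuming that nodes with 0 idle cards are left out of the distribution. The node names and card counts are hypothetical.

```python
from collections import Counter

# Hypothetical idle GPU card count per node.
idle_cards_per_node = {
    "node-a": 0,
    "node-b": 2,
    "node-c": 8,
    "node-d": 2,
}

# Key: x (idle cards on a node); value: number of nodes with exactly x idle cards.
idle_distribution = Counter(
    idle for idle in idle_cards_per_node.values() if idle > 0
)
```

Here two nodes have 2 idle cards each and one node has 8, which is the kind of breakdown that helps judge whether a multi-card job can fit on a single node.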

Node information

| Monitoring item | Description |
|---|---|
| Node name | Name of the node in the current cluster |
| Count of allocated cards | Number of GPU cards allocated on the node |
| Count of GPU-Pods | Number of Pods that occupy GPUs on the node |
| CPU utilization rate | Real-time average utilization rate of all CPUs on the node |
| Memory utilization rate | Real-time average utilization rate of all memory on the node |
| Node status | Current status of the node |
| CPU core count | Total CPU core count of the node |
| Total memory | Total memory of the node |

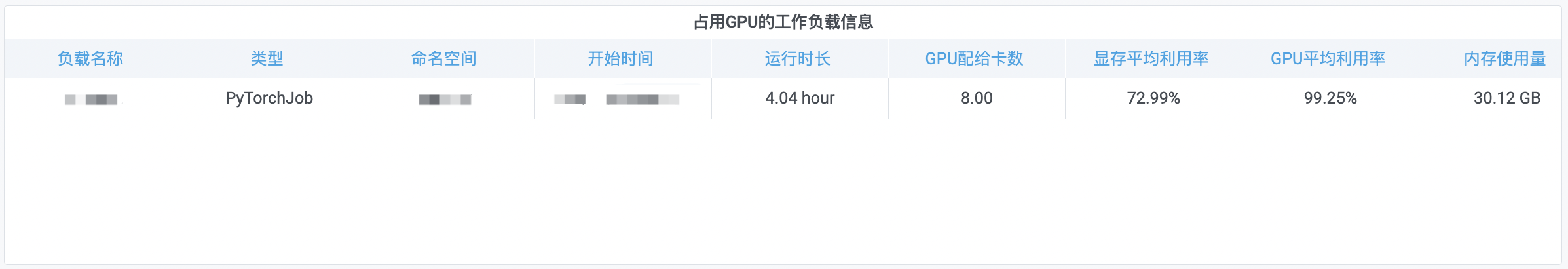

GPU-occupied workload information

| Monitoring item | Description |
|---|---|
| Name of workload | Name of the GPU-occupying workload in the current cluster |
| Type | Type of the workload |
| Namespace | Namespace of the workload |
| Start time | Start time of the workload |
| Runtime | How long the workload has been running |
| Allocated GPU cards | Number of GPU cards allocated to the workload |
| Average memory utilization rate | Real-time average memory utilization rate of all GPU cards used by the workload |
| Average GPU utilization rate | Real-time average utilization rate of the GPU cards used by the workload |
| Memory usage | Memory usage of the workload |
| CPU core count | CPU core count of the workload |