DataX Reading and Writing BOS

Updated at: 2025-11-03

DataX

DataX is an offline tool for heterogeneous data source synchronization, designed to deliver stable and high-performance data transfer across various systems, including relational databases (such as MySQL, Oracle), HDFS, Hive, ODPS, HBase, FTP, and more.

Configuration

- Download and unzip DataX;

- Download and unzip BOS-HDFS, then copy the jar package into both the plugin/reader/hdfsreader/libs/ and plugin/writer/hdfswriter/libs/ directories under the DataX unzip path;

- Open the bin/datax.py script in the DataX unzip directory and modify the CLASS_PATH variable in the script as follows:

```python
CLASS_PATH = ("%s/lib/*:%s/plugin/reader/hdfsreader/libs/*:%s/plugin/writer/hdfswriter/libs/*:.") % (DATAX_HOME, DATAX_HOME, DATAX_HOME)
```

Start
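Before starting a job, it can help to confirm that the configuration steps took effect. The following is a minimal sketch, not part of DataX: the helper names `dirs_missing_jar` and `build_class_path` are hypothetical, and the jar filename pattern `bos-hdfs*.jar` is an assumption that should be adjusted to the actual name of the downloaded jar. Only the two plugin directories and the CLASS_PATH format are taken from the steps above.

```python
from pathlib import Path

# Plugin lib directories named in the configuration steps,
# relative to the DataX unzip directory.
PLUGIN_LIB_DIRS = (
    "plugin/reader/hdfsreader/libs",
    "plugin/writer/hdfswriter/libs",
)


def dirs_missing_jar(datax_home, jar_pattern="bos-hdfs*.jar"):
    """Return the plugin lib directories that hold no jar matching jar_pattern.

    jar_pattern is an assumed filename pattern, not a documented name.
    """
    missing = []
    for rel in PLUGIN_LIB_DIRS:
        lib_dir = Path(datax_home) / rel
        # A directory that does not exist counts as missing the jar.
        if not (lib_dir.is_dir() and any(lib_dir.glob(jar_pattern))):
            missing.append(rel)
    return missing


def build_class_path(datax_home):
    """Build the CLASS_PATH string in the same format as the edited datax.py."""
    return ("%s/lib/*:%s/plugin/reader/hdfsreader/libs/*:"
            "%s/plugin/writer/hdfswriter/libs/*:.") % (
        datax_home, datax_home, datax_home)
```

An empty list from `dirs_missing_jar` suggests the jar was copied to both places. `build_class_path` only reproduces the string format, so it can be compared against the value in `bin/datax.py`.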

Example

Read the test file from {your bucket} and transfer it to {your other bucket}.

testfile:

```
1 hello
2 bos
3 world
```

bos2bos.json:

```json
{
    "job": {
        "setting": {
            "speed": {
                "channel": 1
            },
            "errorLimit": {
                "record": 0,
                "percentage": 0.02
            }
        },
        "content": [{
            "reader": {
                "name": "hdfsreader",
                "parameter": {
                    "path": "/testfile",
                    "defaultFS": "bos://{your bucket}/",
                    "column": [
                        {
                            "index": 0,
                            "type": "long"
                        },
                        {
                            "index": 1,
                            "type": "string"
                        }
                    ],
                    "fileType": "text",
                    "encoding": "UTF-8",
                    "hadoopConfig": {
                        "fs.bos.endpoint": "bj.bcebos.com",
                        "fs.bos.impl": "org.apache.hadoop.fs.bos.BaiduBosFileSystem",
                        "fs.bos.access.key": "{your ak}",
                        "fs.bos.secret.access.key": "{your sk}"
                    },
                    "fieldDelimiter": " "
                }
            },
            "writer": {
                "name": "hdfswriter",
                "parameter": {
                    "path": "/testtmp",
                    "fileName": "testfile.new",
                    "defaultFS": "bos://{your other bucket}/",
                    "column": [{
                            "name": "col1",
                            "type": "string"
                        },
                        {
                            "name": "col2",
                            "type": "string"
                        }
                    ],
                    "fileType": "text",
                    "encoding": "UTF-8",
                    "hadoopConfig": {
                        "fs.defaultFS": "",
                        "fs.bos.endpoint": "bj.bcebos.com",
                        "fs.bos.impl": "org.apache.hadoop.fs.bos.BaiduBosFileSystem",
                        "fs.bos.access.key": "{your ak}",
                        "fs.bos.secret.access.key": "{your sk}"
                    },
                    "fieldDelimiter": " ",
                    "writeMode": "append"
                }
            }
        }]
    }
}
```

Replace {your bucket}, {your other bucket}, the endpoint, {your ak}, {your sk}, and so on with your own values as needed.
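The placeholder substitution can also be scripted rather than done by hand. The sketch below is one possible approach, not a DataX feature: the function name `fill_job_template` is hypothetical, and it assumes the job file is kept as a template containing the placeholder strings exactly as written in the example. It does not touch the endpoint, which is a literal value in the example and still needs to be edited separately.

```python
import json

# Placeholder strings exactly as they appear in the example job file.
PLACEHOLDERS = (
    "{your bucket}",
    "{your other bucket}",
    "{your ak}",
    "{your sk}",
)


def fill_job_template(template_text, values):
    """Substitute every placeholder and return the parsed job configuration.

    Raises KeyError if `values` lacks a placeholder, and
    json.JSONDecodeError if the substituted text is not valid JSON.
    """
    for placeholder in PLACEHOLDERS:
        # json.dumps escapes quotes and backslashes; drop its outer quotes
        # because the placeholder already sits inside a JSON string.
        escaped = json.dumps(values[placeholder])[1:-1]
        template_text = template_text.replace(placeholder, escaped)
    return json.loads(template_text)
```

Parsing the result with `json.loads` doubles as a syntax check, so a malformed job file is caught before DataX is started.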

BOS can also be configured on one side only, as either the reader or the writer.
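When only one side of a job points at BOS, a quick check of the job configuration can show which side that is and whether its `hadoopConfig` looks complete. This is a hedged sketch: the function name `missing_bos_keys` is hypothetical, and the list of expected keys is simply the four `fs.bos.*` entries used in the example above, which is an assumption rather than a documented requirement.

```python
# The fs.bos.* keys that appear in the example job (assumed, not a
# documented minimum set).
EXAMPLE_BOS_KEYS = (
    "fs.bos.endpoint",
    "fs.bos.impl",
    "fs.bos.access.key",
    "fs.bos.secret.access.key",
)


def missing_bos_keys(job):
    """Map each BOS-backed side ("reader" or "writer") to the example
    fs.bos.* keys that are absent from its hadoopConfig."""
    report = {}
    for entry in job["job"]["content"]:
        for side in ("reader", "writer"):
            parameter = entry[side]["parameter"]
            if not parameter.get("defaultFS", "").startswith("bos://"):
                continue  # this side does not use BOS, nothing to check
            hadoop_config = parameter.get("hadoopConfig", {})
            missing = [k for k in EXAMPLE_BOS_KEYS if k not in hadoop_config]
            report.setdefault(side, []).extend(missing)
    return report
```

A side that does not use a `bos://` defaultFS is left out of the result entirely, while a BOS side with every key present maps to an empty list.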

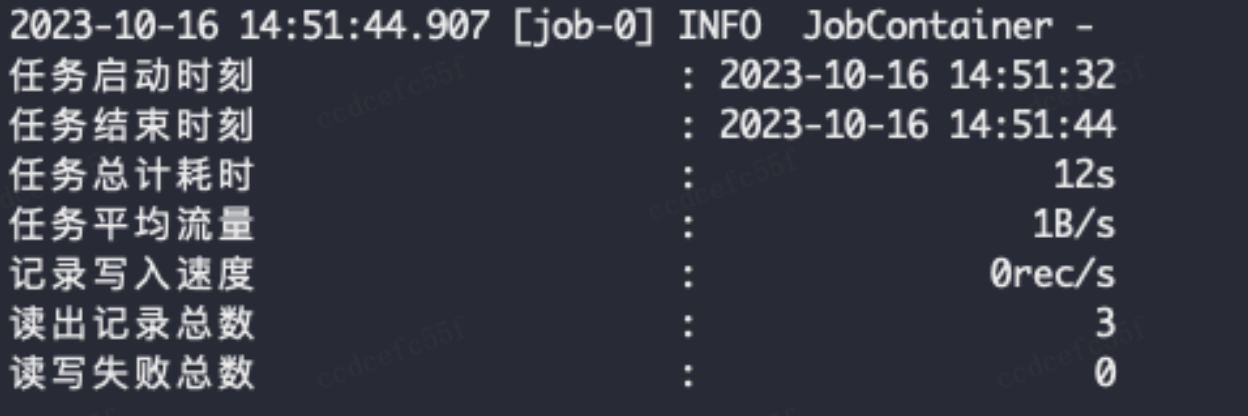

Result

```shell
python bin/datax.py bos2bos.json
```

Output upon successful execution: