Sqoop Application Documentations

Scenarios Description

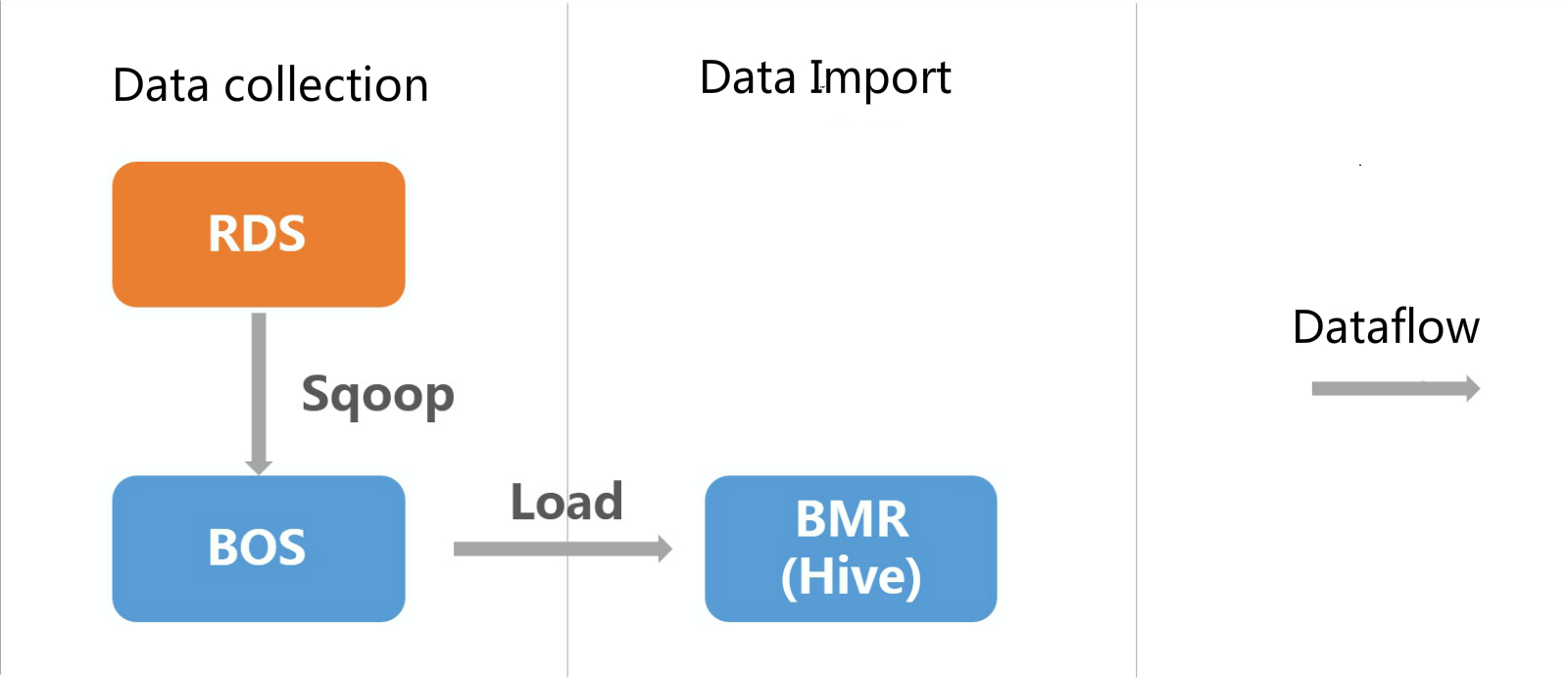

BMR Sqoop is used to import data on RDS to BMR Hive:

In this example, the hive data table's location is the BOS path, and partition is dt (string). Data imported every day are distinguished according to dt specified dates.

Note

The hive data table's location is BOS, so sqoop cannot be directly used to import RDS data to the hive. When loading data, hive writes data into local hdfs and then transfers data directory to the hive table's location. Local hdfs and BOS data are two different file systems so that direct transfer can trigger exceptions. Hence, this scenario needs two steps: data import to BOS and data import to the hive.

Preparation Phase

The data in this example is public datasets of BMR Samples.

Step 1 Prepare Data

The sql file (Download Address) can be used for table creation and data of this example.

Step 2 Create Tables and Import Data

- For how to log in to RDS database, please see Documentation.

- After logging in to RDS, you can select a database to import the downloaded sql file and create a bmr_public_data_logs data table to import the public datasets.

Steps are as follows:

- Select database sqoop;

- Click to import;

- Select the downloaded sql file. Please be noted that the size of your sql file cannot exceed 8M. If the size exceeds 8M, you can try zip file compression, and the compressed file end is .sql.zip.

Step 3 Create Hive Data Tables

Log in to the BMR cluster, switch to hdfs user, and create a hive data table.

# obtain eip from instance list in BMR Console cluster details page

ssh root@eip

# switch to hdfs user

su hdfs

cd

# enable hive shell

hive

# enter the following table creation statement

CREATE EXTERNAL TABLE `bmr_public_data_logs`(

`id` int,

`remote_addr` string,

`time_local` string,

`request` string,

`status` int,

`body_bytes_send` int,

`http_referer` string,

`http_cookie` string,

`remote_user` string,

`http_user_agent` string,

`request_time` double,

`host` string,

`msec` double)

PARTITIONED BY (

`dt` string)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'bos://test-sqoop-example/bmr-public-data-logs/';Notes

- The default field separator for sqoop import is ",", so you need to set "," as field separation of hive table when creating it, which means in the table creation statement: ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

- Modify the location's bos path to be your bos path

Import Data to BOS

You can use Sqoop to import data on RDS to BOS. For more usages of Sqoop, please see Documentation.

# obtain eip from instance list in BMR Console cluster details page

ssh root@eip

# switch to hdfs user

su hdfs

cd

# use sqoop command to import data to bos

sqoop import --connect jdbc//rds_mysql_hostname:port/sqoop --username test --password test --table bmr_public_data_logs --split-by id --target-dir bos://test-sqoop-example/bmr-log-testRemarks:

Sqoop command needs replacement as follows:

(1) RDS domain name;

(2) RDS database;

(3) RDS database user name;

(4) RDS database password;

(5) Target path on BOS (not exist before sqoop import);

Import Data to Hive

Use hive to load data.

# obtain eip from instance list in BMR Console cluster details page

ssh root@eip

# switch to hdfs user

su hdfs

cd

# enable hive shell

hive

# import data

load data inpath 'bos://test-sqoop-example/bmr-log-test' into table bmr_public_data_logs partition (dt='2015-11-02');Notes:

bos://test-sqoop-example/bmr-log-testis target path used by sqoop to import data to bos.- During data loading, hive transfers data imported to bos by sqoop to the location specified by the hive table, creates directory "dt=2015-11-02" in the location (

bos://test-sqoop-example/bmr-public-data-logs/), and transfers data to such directory. The original directory imported to bos by sqoop becomes null and can be deleted. - During data loading, overwrite can exist, for example, "load data inpath 'bos://test-sqoop-example/bmr-log-test' overwrite into table bmr_public_data_logs partition (dt='2015-11-02');" is used to overwrite original partition; in case of no overwrite, files of same name are renamed, and repeated data exists in hive table if data file has repeated data.