Import Data

Data Import by Sqoop

Before using BMR to add jobs, you need to upload the data for analysis to BOS. For more information, please see BOS Uploads Object.

You can use Sqoop to import data in RDS to BOS, HDFS, HBase, or Hive. The specific operation jobs are as follows:

Import Data from Relational Database Service (RDS) to BOS

- Use SSH to connect to the master node. For more information, please see SSH Connection to Cluster.

- Enter the command: su hdfs. Switch to HDFS user.

-

execute the command in the following format: sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --target-dir XX

Example: sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test --username sqoop --password sqoop_123 --target-dir bos://abc/sqooptest

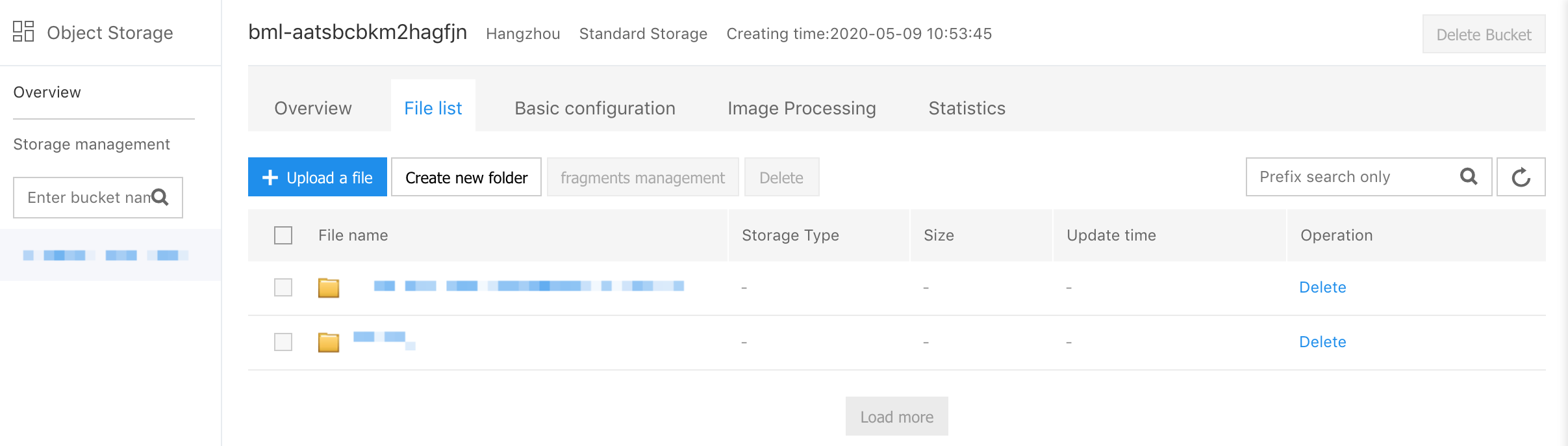

- You can view the execution outcome in BOS. Example of viewing execution outcome in BOS:

Import Data from Relational Database Service (RDS) to HDFS

- Execute steps 1 to 2 in Import Data from Relational Database Service (RDS) to BOS.

-

execute the command in the following format:

sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --target-dir XX

Example: sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test --username sqoop --password sqoop_123 --target-dir /user/hdfs/sqooptest

- You can view the execution outcome in HDFS.

| Parameters | Parameter Description |

|---|---|

| address and port | Address and port number of RDS instance. Obtain it from the basic information of the RDS instance. For more information, please see Connect RDS Instance |

| db_name | Name of database which contains data to import. For how to create Relational Database Service (RDS) instance, please see Create database. |

| table_name | Name of the data table, which contains data to import. If you need to create a data table, please log in to the RDS instance. For more information, please see Connect RDS Instance. |

| --username and --password | Account, and password of database which contains data to import. Obtain information from the RDS instance. For more information, please see Manage Database Accounts. |

| --target-dir | Target address of data import, which is the path of BOS or HDFS. Note: If the BOS path is specified, the directory in such a path cannot exist on the bos. For example, the sqooptest directory in import path bos://test/sqooptest must not exist on the bos. |

Import Data from Relational Database Service (RDS) to HBase

- Use SSH to connect to the master node. For more information, please see SSH Connection to Cluster.

- Enter the command: su hdfs. Switch to HDFS user.

-

Two types of data import to HBase by Sqoop:

- If you import the data to the table where HBase exists, execute the command in the following format: sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --hbase-table hbase_table_name --column-family hbase_column_family --hbase-row-key row_key. Example: sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test --username sqoop --password sqoop_123 --hbase-table sqoop_hbase -- column-family message1 --habse-row-key id.

- If you import the data to the table where Hbase does not exist, execute the command in the following format: sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --hbase-table hbase_table_name --column-family hbase_column_family --hbase-row-key row_key --hbase-create-table. Example: sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test --username sqoop --password sqoop_123 --hbase-table sqoop_hbase -- column-family message1 --habse-row-key id --hbase-create-table.

-

You can view execution outcome in HBase. The example of execution outcome is as follows:

hbase(main):003:0>scan "sqoop_hbase" ROW COLUMN+CELL 1 column=message1:address,timestamp=1439794756361,value=shanghai 1 column=message1:favor,timestamp=1439794756361,value=lanqiu 1 column=message1:name,timestamp=1439794756361,value=xiaoming 1 column=message1:sex,timestamp=1439794756361,value=1 1 column=message1:address,timestamp=1439794756361,value=beijing 1 column=message1:favor,timestamp=1439794756361,value=pingpong 1 column=message1:name,timestamp=1439794756361,value=xiaohong 1 column=message1:sex,timestamp=1439794756361,value=2

| Parameters | Parameter Description |

|---|---|

| address and port | Address and port number of RDS instance. Obtain it from the basic information of the RDS instance. For more information, please see Connect RDS Instance |

| db_name | Name of database which contains data to import. For how to create Relational Database Service (RDS) instance, please see Create database. |

| table_name | Name of the data table, which contains data to import. If you need to create a data table, please log in to the RDS instance. For more information, please see Connect RDS Instance. |

| --username and --password | Account, and password of database which contains data to import. Obtain information from the RDS instance. For more information, please see Manage Database Accounts. |

| --hbase-table | Name of target data table for data import, which is the name of the data table in HBase. |

| --column-family | Column family of the target data table, which is the column family of data table in HBase. Note: sqoop specifies one column family at a time. |

| --hbase-row-key | Field used as hbase row key in the RDS data table containing the data sources. |

Import Data from Relational Database Service (RDS) to Hive

- Use SSH to connect to the master node. For more information, please see SSH Connection to Cluster.

- Enter command: su hdfs. Switch to HDFS user.

-

Three types of data import to Hive by Sqoop:

-

If Hive has no data table with the same name as Relational Database Service (RDS), you can execute the command in the following format:

sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --hive-import

Example:

sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test --username sqoop --password sqoop_123 --hive-import

-

By default, sqoop sets the table name in the hive as the name of the data table used to import data. If you do not want default table name, you can use --hive-table to specify new data table, and execute the command in the following format: sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --hive-import --hive-table XX

Example: sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test --username sqoop --password sqoop_123 --hive-import --hive-table sqoop_hive

-

If Hive has data table with the same name as Relational Database Service (RDS), you need to use --hive-table to specify data table, add --hive-overwrite parameters, and execute the command in the following format:

sqoop import --connect jdbc:mysql://address:port/db_name --table table_name --username XX --password XX --hive-import --hive-table XX --hive-overwrite

Use another table test_export in RDS to specify the table sqoop_hive which exists in hive, example:

sqoop import --connect jdbc:mysql://mysql56.rdsmiy5q77jfaqp.rds.bj.baidubce.com:3306/sqoop --table test_export --username sqoop --password sqoop_123 --hive-import --hive-table sqoop_hive -- hive-overwirte。

-

-

You can view execution outcomes in Hive. The example of execution outcome is as follows:

hive>select * from test; OK 1 xiaoming 1 shanghai lanqiu 2 xiaohong 2 beijing pingpong

| Parameters | Parameter Description |

|---|---|

| address and port | Address and port number of RDS instance. Obtain it from the basic information of the RDS instance. For more information, please see Connect RDS Instance |

| db_name | Name of database which contains data to import. For how to create Relational Database Service (RDS) instance, please see Create database. |

| table_name | Name of the data table, which contains data to import. If you need to create a data table, please log in to the RDS instance. For more information, please see Connect RDS Instance. |

| --username and --password | Account, and password of database which contains data to import. Obtain information from the RDS instance. For more information, please see Manage Database Accounts. |

| --hive-import | Import data to hive. |

| --hive-table | Name of target data table for data import, which is the name of the data table in Hive. |

| --hive-overwrite | Overwrite data table with the same name as Relational Database Service (RDS) in Hive. Note: Data import can be incorrect in case of transfer of non-INT type to INT type. |