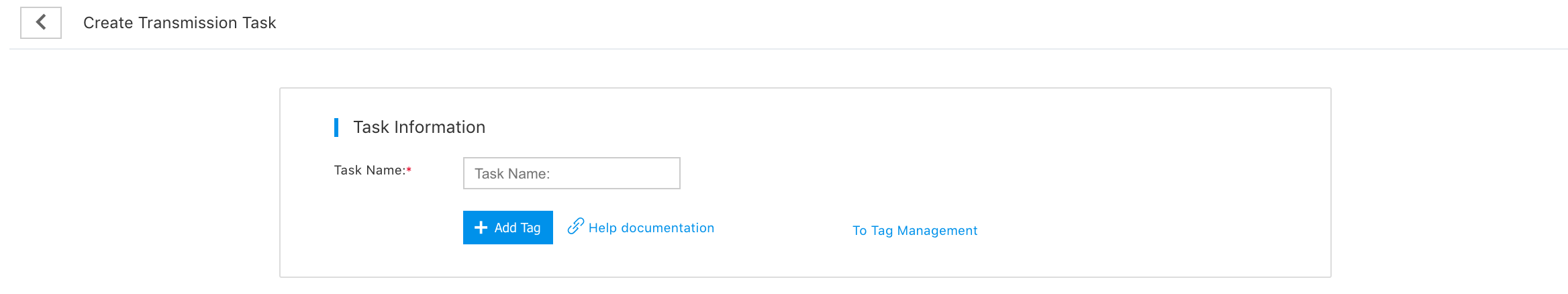

Create a Transmission Task

Task Information

1. On the Baidu Log Service page, click “Transmission Task” to open the “Transmission Task” page, and then click “Create Transmission Task” to open the task creation page.

2. Enter a task name in the “Task Information” section.

3. Add a label (tag) to the task to make it easier to categorize and search.

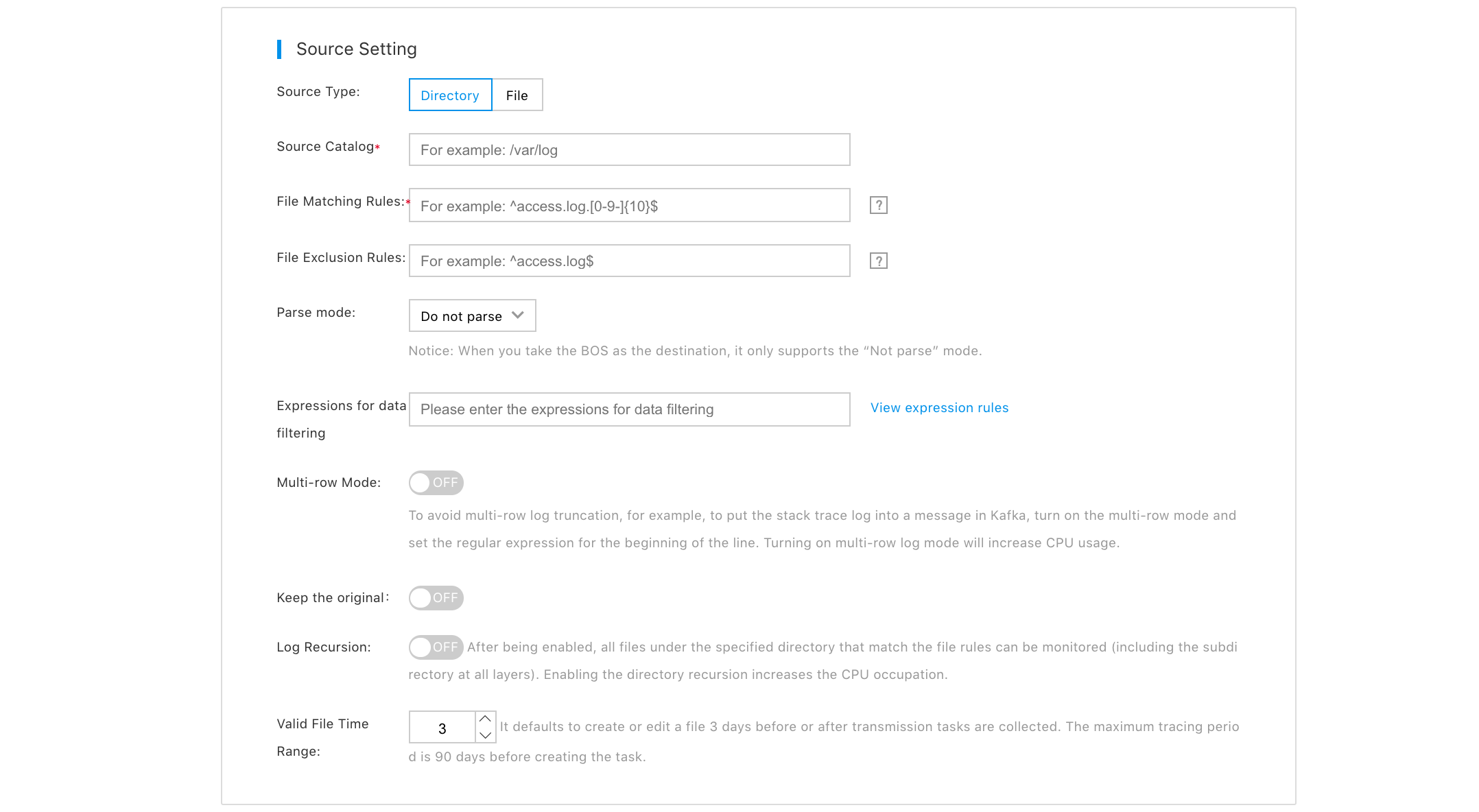

Source Setting

4. Configure the source. Two source types are available, “Directory” and “File”, which differ as follows:

- Directory: Suited to collecting offline log data and offline files under the selected directory.

- File: Suited to collecting online files to which data is continuously appended.

When BOS is the destination, the system collects data every 5 minutes by default; when KAFKA or BES is the destination, the system collects data in real time. The configuration items for each source type are described below.

“Directory” as Source

When the source type is “Directory”, configure the following items:

- Source log directory: Enter the directory from which logs are collected. The directory supports golang glob pattern matching. For details, see Directory Rules.

- Rules for matching files: Enter a regular expression; files whose names match it are monitored and collected. For example, `$` matches the end of the input string, so `\.tmp$` matches all file names ending with “.tmp” (the backslash escapes the dot, which otherwise matches any character).

- Rules for excluding files: If you enter a regular expression, files whose names match it are neither monitored nor collected. Exclude log files that are still being written to; otherwise, transmission errors may occur.

- Parsing mode: Choose from “Non-parsing”, “JSON mode”, “Delimiter mode”, and “Regular expression mode”.

- Non-parsing: Collects and transmits the original log data without parsing it.

- JSON mode: Collects log data in JSON format and supports parsing of nested JSON.

- Delimiter mode: Splits each log entry on the specified delimiter and converts the result to JSON. Supported delimiters are space, tab, vertical bar (|), comma, and custom delimiters of any format. The parsed fields are displayed as “Value” entries in the “Parsing result”, and you must enter a custom “Key” for each “Value”.

- Whole regular expression mode: Enter a sample log entry and a regular expression, and then click “Parse” to parse the sample with that expression. The parsed fields are displayed as “Value” entries in the “Parsing result”, and you must enter a custom “Key” for each “Value”.

- Data filtering expression: If left empty, all data is collected; if specified, only log data that satisfies the expression is collected. Expressions support the following syntax:

- A field is referenced by its key as `$key`.

- Operators: string fields support `=` and `!=`; numeric fields support `=`, `!=`, `>`, `<`, `>=`, and `<=`; Boolean fields support `!`. Conditions on different fields can be combined with `&&`, `||`, and parentheses `(` and `)`.

- If a regular expression contains special characters such as operators, parentheses, or `$`, enclose the regular expression in double quotation marks (`""`).

- Sample: `($level = "^ERROR|WARN$" || $ip != 10\.25.*) && (($status>=400) || !$flag) && $user.name = ^B.*`

- Discard log data that fails to parse: When enabled, log data that fails to parse is discarded automatically; when disabled, such data is transmitted to downstream services in its original form. This option is not available in “Non-parsing” mode.

- Directory recursion: Disabled by default. When enabled, all files that meet the matching rules in the source log directory and its subdirectories are transmitted. Note: If log files in different subdirectories have the same name and the destination is BOS, BOS aggregates the files that share that name.

- Multi-line mode: If a single log entry spans multiple lines, enable multi-line mode and set a regular expression that matches the first line of each entry. The system uses this expression to identify where each log entry begins.

- Effective time range for files: By default, files created or modified within 1 day before the task is created, or at any time after it is created, are collected. The range can be extended back to a maximum of 7 days before task creation.
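The sample filtering expression above can be read as the following Go predicate. This is a hand translation for illustration, not the service's expression engine: it assumes that `=` and `!=` on a string field mean "matches" and "does not match" the regular expression, and that `$user.name` refers to the `name` field of a nested `user` object. The `Record` and `User` types are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// User and Record are hypothetical types standing in for a parsed log entry
// (for example, one produced by JSON mode with a nested "user" object).
type User struct{ Name string }

type Record struct {
	Level  string
	IP     string
	Status int
	Flag   bool
	User   User
}

var (
	levelRe = regexp.MustCompile(`^ERROR|WARN$`) // "starts with ERROR" or "ends with WARN"
	ipRe    = regexp.MustCompile(`10\.25.*`)
	nameRe  = regexp.MustCompile(`^B.*`)
)

// matchesSample mirrors the sample expression
//   ($level = "^ERROR|WARN$" || $ip != 10\.25.*) &&
//   (($status>=400) || !$flag) && $user.name = ^B.*
func matchesSample(r Record) bool {
	return (levelRe.MatchString(r.Level) || !ipRe.MatchString(r.IP)) &&
		(r.Status >= 400 || !r.Flag) &&
		nameRe.MatchString(r.User.Name)
}

func main() {
	samples := []Record{
		{Level: "ERROR", IP: "10.25.0.8", Status: 500, User: User{Name: "Bob"}},                // true
		{Level: "INFO", IP: "10.25.0.8", Status: 500, User: User{Name: "Bob"}},                 // false: level fails and IP is in 10.25.*
		{Level: "INFO", IP: "192.168.1.2", Status: 200, Flag: true, User: User{Name: "Bob"}}, // false: status < 400 and flag set
		{Level: "WARN", IP: "10.25.0.8", Status: 200, User: User{Name: "Alice"}},              // false: name does not start with B
	}
	for _, r := range samples {
		fmt.Println(matchesSample(r))
	}
}
```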

“File” as Source

When the source type is “File”, configure the following items:

- Real-time absolute path of log: Enter the absolute path of the source log file to collect. The path supports golang glob pattern matching.

- Regular expression of rotated log file name: If your log file is rotated, enter a regular expression that matches the rotated file names so that no data is missed during rotation.

- Parsing mode: Choose from “Non-parsing”, “JSON mode”, “Delimiter mode”, and “Regular expression mode”.

- Non-parsing: Collects and transmits the original log data without parsing it.

- JSON mode: Collects log data in JSON format and supports parsing of nested JSON.

- Delimiter mode: Splits each log entry on the specified delimiter and converts the result to JSON. Supported delimiters are space, tab, vertical bar (|), comma, and custom delimiters of any format. The parsed fields are displayed as “Value” entries in the “Parsing result”, and you must enter a custom “Key” for each “Value”.

- Whole regular expression mode: Enter a sample log entry and a regular expression, and then click “Parse” to parse the sample with that expression. The parsed fields are displayed as “Value” entries in the “Parsing result”, and you must enter a custom “Key” for each “Value”.

- Multi-line mode: If a single log entry spans multiple lines, enable multi-line mode and set a regular expression that matches the first line of each entry. The system uses this expression to identify where each log entry begins.

- Discard log data that fails to parse: When enabled, log data that fails to parse is discarded automatically; when disabled, such data is transmitted to downstream services in its original form. This option is not available in “Non-parsing” mode.

- Effective time range for files: Selectable from 1 to 7 days; the default is 1 day. Files created or modified within the selected range before the task is created, or at any time after it is created, are collected.

Destination Setting

5. Set the log delivery destination in “Destination setting”. Three destination types are available (KAFKA, BOS, and BES), each with its own parameters:

“KAFKA” as Destination

- Kafka topic: Select an existing Kafka topic.

- Data discard: Disabled by default. When enabled, any single message larger than 10 MB is discarded.

- Data compression: Choose whether to compress log files before transmission. Compression is disabled by default; to enable it, select one of the following algorithms:

- Gzip: High compression ratio that saves space effectively, but slower compression and higher CPU usage than Snappy and Lzop.

- Snappy: Faster compression than Gzip, with a lower compression ratio.

- Lzop: Fast compression, slightly slower than Snappy, with a slightly higher compression ratio than Snappy.

- Partitioner type: Random by default. Select “Hash by Value” to support message deduplication.

- Message key: None by default. It can be set to “Source CVM Server HostName” or “Source CVM Server IP”.

- Transmission rate: When data compression is turned on, the data transmission rate is limited to 1MB/s; when data compression is off, the data transmission rate is limited to 10MB/s.
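The Go sketch below contrasts the two partitioner types and the message-size discard rule. It assumes that “10M” means 10 MiB and uses FNV-1a from the Go standard library purely for illustration; the hash function the service actually uses is not documented here. The point is that hashing by value sends identical messages to the same partition, which is what makes per-partition deduplication possible downstream.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"math/rand"
)

// maxMessageBytes is the "Data discard" threshold, assuming 10M means 10 MiB.
const maxMessageBytes = 10 * 1024 * 1024

// hashPartition maps the same value to the same partition every time.
// FNV-1a is an illustrative stand-in for the service's unknown hash.
func hashPartition(value []byte, numPartitions int) int {
	h := fnv.New32a()
	h.Write(value) // Write on a hash.Hash never returns an error
	return int(h.Sum32() % uint32(numPartitions))
}

// randomPartition spreads messages without regard to their content.
func randomPartition(numPartitions int) int {
	return rand.Intn(numPartitions)
}

// shouldDiscard reports whether a message exceeds the size threshold while
// "Data discard" is enabled.
func shouldDiscard(msg []byte, discardEnabled bool) bool {
	return discardEnabled && len(msg) > maxMessageBytes
}

func main() {
	msg := []byte(`{"level":"ERROR","msg":"disk full"}`)
	fmt.Println(hashPartition(msg, 6) == hashPartition(msg, 6)) // true: duplicates land together
	fmt.Println(randomPartition(6))                              // any of 0..5
	fmt.Println(shouldDiscard(make([]byte, 11*1024*1024), true)) // true: over the threshold
}
```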

“BOS” as Destination

When BOS is the log delivery destination, you can deliver offline logs, and the system generates a log file on BOS for the collected logs every 15 minutes. Configure the following parameters:

- BOS path: Select the BOS path where logs are stored.

- Log aggregation: When the source is a directory, you can choose “Aggregate by time” or “Aggregate by CVM server”; when the source is a file, only “Aggregate by CVM server” is available.

- Aggregate by time: Select the timestamp format of the source log files (for example, “YYYY-MM-DD”) and the aggregation method: by day, by hour, or custom. For custom aggregation, set a date wildcard as described in the prompt on the right; the system then aggregates the logs into the BOS path you specify according to that wildcard.

- Aggregate by CVM server: Choose whether to aggregate by CVM server IP or by CVM server name.

- Transmission rate: 1 MB/s by default, adjustable up to 100 MB/s.

- Data compression: Choose whether to compress log files before transmission. Compression is disabled by default; to enable it, select one of the following algorithms:

- Gzip: High compression ratio that saves space effectively, but slower compression and higher CPU usage than Snappy and Lzop.

- Snappy: Faster compression than Gzip, with a lower compression ratio.

- Lzop: Fast compression, slightly slower than Snappy, with a slightly higher compression ratio than Snappy.

- Transmission notification: Disabled by default and supported only for directory sources. When enabled, after each file is transmitted, the system creates an empty file whose name ends with the “.done” suffix in the BOS destination path, so that downstream services can use this marker to start processing, as shown below.
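As a sketch of daily time-based aggregation and the transmission notification, the Go code below reads a `YYYY-MM-DD` timestamp from a source file name (Go layout `2006-01-02`), places the file under a per-day folder, and derives the name of the “.done” marker. The `<BOS path>/<date>/<file name>` layout, the `<object key>.done` marker name, and both helper functions are assumptions for illustration.

```go
package main

import (
	"fmt"
	"path"
	"regexp"
	"time"
)

// datePattern finds a YYYY-MM-DD timestamp inside a source file name.
var datePattern = regexp.MustCompile(`\d{4}-\d{2}-\d{2}`)

// dailyObjectKey groups a source file under a per-day folder of the BOS path.
// The "<bosPath>/<YYYY-MM-DD>/<fileName>" layout is hypothetical.
func dailyObjectKey(bosPath, fileName string) (string, error) {
	stamp := datePattern.FindString(fileName)
	if stamp == "" {
		return "", fmt.Errorf("no YYYY-MM-DD timestamp in %q", fileName)
	}
	day, err := time.Parse("2006-01-02", stamp) // Go layout for YYYY-MM-DD
	if err != nil {
		return "", err // e.g. 2024-13-40 is rejected as an invalid date
	}
	return path.Join(bosPath, day.Format("2006-01-02"), fileName), nil
}

// doneMarkerKey names the empty marker written after a file is transmitted,
// assuming the marker is the object key plus the ".done" suffix.
func doneMarkerKey(objectKey string) string {
	return objectKey + ".done"
}

func main() {
	key, err := dailyObjectKey("logs/app", "access-2024-05-10.log")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(key)                // logs/app/2024-05-10/access-2024-05-10.log
	fmt.Println(doneMarkerKey(key)) // logs/app/2024-05-10/access-2024-05-10.log.done
}
```

Under these assumptions, a downstream job would process a file only after its `.done` key appears.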

“BES” as Destination

When BES is the log delivery destination, you can deliver real-time logs. Configure the following parameters:

- Select BES cluster: Select an existing BES cluster in the current region.

- User name: Enter the login user name for the selected BES cluster.

- Password: Enter the password for the selected BES cluster.

- Test connectivity: Verify that the selected BES cluster can be reached. If the connection fails, the transmission task cannot run properly.

- Index prefix: A user-defined prefix. When index rolling is enabled, the index name in the BES cluster consists of the index prefix plus the collection date; when it is disabled, the index name is the index prefix alone. The collection date is the date the data was written to BES, in YYYY-MM-DD format.

- Index rolling: Disabled by default. Sets how often the BES cluster automatically generates a new index; when enabled, a new index is generated at the set frequency and collected log data is written to the new index.

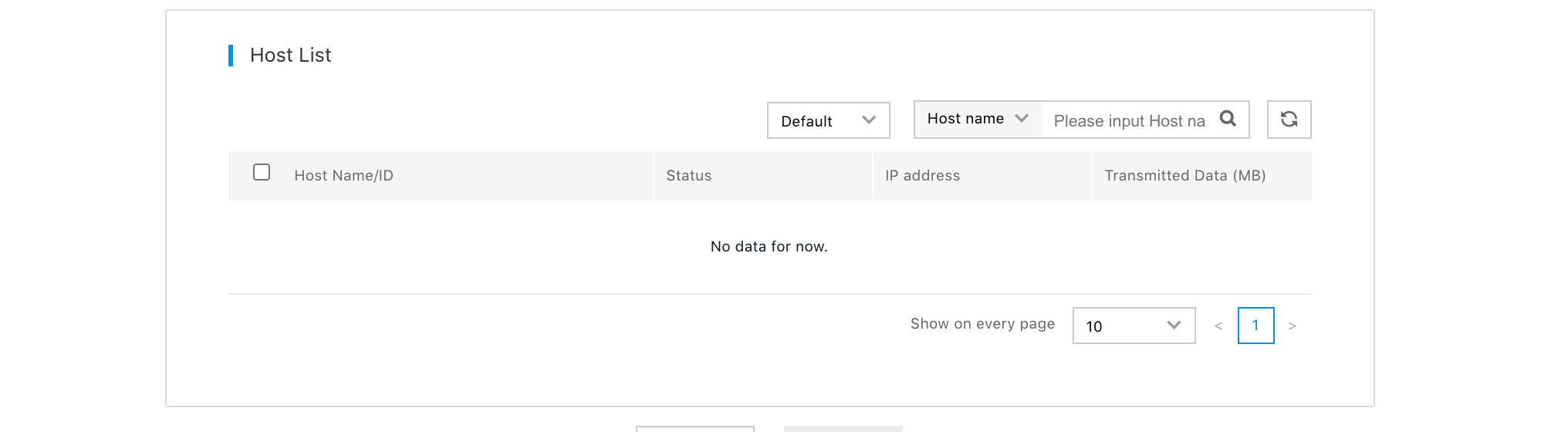

Select a CVM Server

6. Select the CVM servers on which the collector is installed, so that the collector server can dispatch the transmission task to them. As shown in the figure below, the list contains the CVM servers in the current region that have a collector installed and are not in the “lost” status.

7. Click “Save” to create the log transmission task. The task takes effect in about 1 minute.