Create Delivery Task

Updated at: 2025-11-03

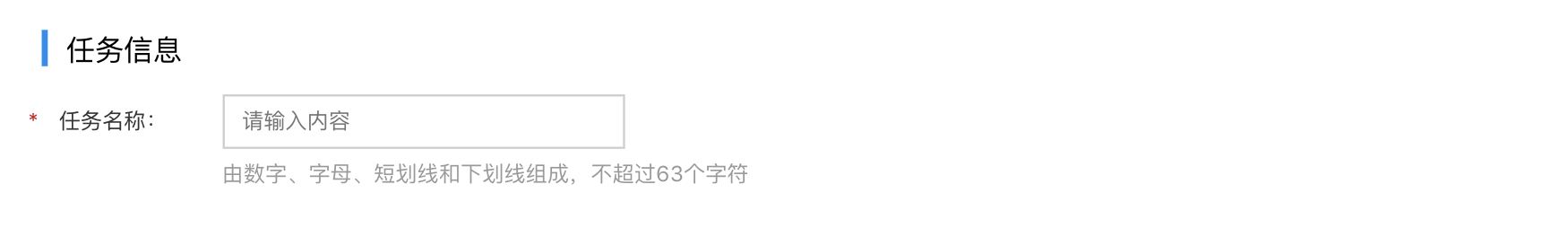

Task Information

- On the Log Service page, click "Logshipper" to open the Logshipper List page. Then, click "Create Logshipper" to access the Create Logshipper page and configure the settings.

- In the Task Information section, enter the task name.

General delivery configuration

In the delivery configuration section, specify the source LogStore, delivery destination, compression type, delivery interval, field definition, and other settings.

- LogStore: Choose the LogStore to deliver from the dropdown menu. You can also search by LogStore name.

- Log start time: Set the time from which the logshipper starts delivering logs, with second-level precision. The default is the current time.

- Delivery destination: BOS and Kafka are supported.

- BOS:

- BOS path: Select the target BOS bucket path.

- Created bucket: If the bucket has already been created in the BOS console, select it from the dropdown menu. (If it does not appear, verify that the bucket and the delivered LogStore are in the same region.)

- No bucket created: If a bucket hasn't been created yet, click "Create Bucket" in the dropdown menu to create one.

- Disk partition format for time: You can define the time partition format, supporting minute-level granularity. The default format is %Y/%m/%d/%H/%M/.

- Disk partition for logstream: When the disk partition for logstream is enabled, the logstream becomes the partition path for BOS. Files delivered to BOS are stored under the path %Y/%m/%d/%H/%M/%logStream.

- Delivery size upper limit: If a delivery size limit is set, log files exceeding this limit will be split into multiple parts to control the Object size in BOS.
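The partition settings above can be illustrated with a minimal Python sketch. It assumes the time partition format behaves like standard `strftime` directives and that the logstream name is appended as the last path segment, as the `%Y/%m/%d/%H/%M/%logStream` pattern suggests. The function name `partition_path` and the logstream name `web-01` are hypothetical, and the sketch does not cover how BOS names the delivered objects themselves.

```python
from datetime import datetime
from typing import Optional


def partition_path(ts: datetime,
                   time_format: str = "%Y/%m/%d/%H/%M/",
                   log_stream: Optional[str] = None) -> str:
    """Build the partition path for a log timestamp (illustrative only)."""
    # Expand the time partition format, e.g. 2025/11/03/14/07/
    prefix = ts.strftime(time_format)
    if log_stream is None:
        return prefix
    # With logstream partitioning enabled, the logstream is the last segment.
    if not prefix.endswith("/"):
        prefix += "/"
    return prefix + log_stream


ts = datetime(2025, 11, 3, 14, 7, 30)
print(partition_path(ts))                       # 2025/11/03/14/07/
print(partition_path(ts, log_stream="web-01"))  # 2025/11/03/14/07/web-01
```

Because the default format stops at `%M`, all logs from the same minute share one partition path.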

- Kafka:

- Cluster: Select an existing cluster, or go to the Kafka console to create one. Note: The product transfer switch must be enabled for the cluster in the Kafka product console.

- Topic: Select a topic in the cluster chosen above.

- Compression type (only supported for the BOS delivery destination): Choose whether to compress log files before delivery. Compression is disabled by default. If you enable it, select a compression algorithm. The characteristics of each algorithm are as follows:

- Gzip: Offers a high compression ratio that effectively saves space but has slower compression speeds and higher CPU usage when compared to Snappy and Lzop.

- Snappy: Provides faster compression speeds but a lower compression ratio than Gzip.

- Lzop: Offers high compression speed and slightly better compression ratios compared to Snappy.

- Bzip2: Offers a higher compression ratio than Gzip, but compresses more slowly and uses more CPU.
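Actual ratios depend heavily on the log content, so it can help to measure them on a sample of your own logs before choosing an algorithm. The sketch below is one way to do that with the Python standard library. It covers only Gzip and Bzip2, because Snappy and Lzop require third-party packages. The function name `compression_ratios` and the sample log lines are made up for illustration, and the result says nothing about how BLS itself compresses files.

```python
import bz2
import gzip


def compression_ratios(payload: bytes) -> dict:
    """Return compressed size divided by original size for each codec."""
    sizes = {
        "gzip": len(gzip.compress(payload)),
        "bzip2": len(bz2.compress(payload)),
    }
    return {name: size / len(payload) for name, size in sizes.items()}


# Synthetic, repetitive log lines standing in for a real sample.
sample = b"".join(
    b"2025-11-03 12:00:%02d INFO request_id=%d status=200\n" % (i % 60, i)
    for i in range(5000)
)

ratios = compression_ratios(sample)
for name, ratio in sorted(ratios.items()):
    print(f"{name}: {ratio:.3f} of original size")
```

A lower value means a smaller delivered file. To compare speed as well, wrap each `compress` call with `time.perf_counter()`.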

- Field definition: If Retain Original Text is enabled, the original log text is delivered. If it is disabled, BLS delivers the parsed key-value pairs in place of the original text; logs that could not be parsed are delivered as their original text.

- Storage format configuration: When key-value pairs are selected for field definition, they can be stored in JSON, Parquet, or CSV format. The configuration for each format is as follows:

- JSON format: Data is delivered to BOS or Kafka in JSON format. No additional configuration is required.

- Parquet format (only supported for the BOS delivery destination): Data is delivered to BOS in Parquet format. No additional configuration is required.

- CSV format:

- Delimiter: Supports delimiters such as spaces, tabs, vertical bars, and commas. You can also define custom delimiters of up to three characters.

- If a field includes delimiter characters, enclose it in quotes to avoid incorrect splitting.

- Field Names: When enabled, the CSV file will include the field names.
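The CSV options above (delimiter, quoting, and field names) correspond to common CSV writer settings. The following sketch uses Python's `csv` module to show the effect of each option on the output. It is an illustration, not the BLS implementation: the helper `to_csv` and the sample record are hypothetical, and Python's `csv` module accepts only single-character delimiters, so custom multi-character delimiters are not covered.

```python
import csv
import io


def to_csv(records, field_names, delimiter=",", include_header=True):
    """Serialize key-value records to CSV text (illustrative only)."""
    buf = io.StringIO()
    # QUOTE_MINIMAL quotes only fields that contain the delimiter,
    # the quote character, or a line break.
    writer = csv.writer(buf, delimiter=delimiter,
                        quoting=csv.QUOTE_MINIMAL, lineterminator="\n")
    if include_header:
        # Corresponds to enabling "Field Names".
        writer.writerow(field_names)
    for record in records:
        writer.writerow([record.get(name, "") for name in field_names])
    return buf.getvalue()


records = [{"level": "INFO", "msg": "a|b"}]
print(to_csv(records, ["level", "msg"], delimiter="|"))
# level|msg
# INFO|"a|b"
```

The `msg` value contains the vertical-bar delimiter, so it is quoted and a consumer reading the file still sees two columns.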

- Delivery interval: The logshipper is triggered at a fixed interval based on system time. The interval can be set between 5 and 60 minutes.
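The constraints described in this section can be collected into a small pre-flight check, for example before filling in the form or scripting the setup. The sketch below is a hypothetical Python model of those constraints. The class `DeliveryConfig`, its field names, and the lowercase option values are assumptions made for illustration and do not correspond to any BLS API or SDK.

```python
from dataclasses import dataclass
from typing import List, Optional

COMPRESSION_TYPES = ("gzip", "snappy", "lzop", "bzip2")
STORAGE_FORMATS = ("json", "parquet", "csv")


@dataclass
class DeliveryConfig:
    destination: str                    # "bos" or "kafka"
    storage_format: str                 # "json", "parquet", or "csv"
    interval_minutes: int               # delivery interval
    compression: Optional[str] = None   # disabled by default
    csv_delimiter: Optional[str] = None

    def validate(self) -> List[str]:
        """Return a list of constraint violations (empty if none)."""
        errors = []
        if self.destination not in ("bos", "kafka"):
            errors.append("destination must be 'bos' or 'kafka'")
        if self.compression is not None:
            if self.compression not in COMPRESSION_TYPES:
                errors.append("unknown compression type")
            if self.destination != "bos":
                errors.append("compression is only supported for BOS")
        if self.storage_format not in STORAGE_FORMATS:
            errors.append("unknown storage format")
        if self.storage_format == "parquet" and self.destination != "bos":
            errors.append("Parquet is only supported for BOS")
        if self.storage_format == "csv":
            if not self.csv_delimiter or len(self.csv_delimiter) > 3:
                errors.append("CSV delimiter must be 1 to 3 characters")
        if not 5 <= self.interval_minutes <= 60:
            errors.append("interval must be between 5 and 60 minutes")
        return errors


ok = DeliveryConfig("bos", "parquet", 30, compression="gzip")
bad = DeliveryConfig("kafka", "parquet", 3, compression="gzip")
print(ok.validate())   # []
print(bad.validate())  # three violations: compression, Parquet, interval
```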