Use DTS to Implement Data Migration from Self-built SQL Server to Baidu AI Cloud RDS for SQL Server

1. Application Scenarios

This article applies to using Baidu AI Cloud data transmission service (DTS) to migrate the data of the self-built SQL Server or Baidu AI Cloud RDS for SQL Server database instance into the self-built SQL Server or Baidu AI Cloud RDS for SQL Server.

2. Restrictions on Using SQL Server Database as Source

2.1 Restrictions on structural migration tasks

- The SQL Server→SQL Server homogeneous database supports the structural migration of table, view, custom data type, function, storage process, and triggers.

2.2 Restriction on full data migration tasks

- If the primary key or unique constraint does not exist for the table to be migrated in the source database and all fields are not unique, there may be duplicate data in the target database.

- The data types MONEY and SMALLMONEY only support the last two decimal places.

2.3 Restriction on incremental data migration tasks

-

The SQL Server incremental migration relies on the SQL Server CDC feature, so that you need to start the CDC feature first. For details, refer to the official website: About Change Data Capture (SQL Server).

- SQL Server needs the support of the SQL Server proxy service to enable the CDC feature.

- CDC requires the exclusive use of the cdc architecture and cdc users. If the CDC architecture or database user exists in a database currently, you cannot enable the change data capture for this database before you delete or rename this architecture or user.

- To execute DDL for the source table with the CDC feature enabled, it can be operated by the member of the sysadmin and database role db_owner or the member of database role db_ddladmin only.

- The account used to enable the database-level CDC feature must be a member of the role sysadmin. The account used to enable the table-level CDC feature must be a member of the role sysadmin or db_owner.

- The incremental change of the column set is not supported.

- The incremental change of the computed column is not supported.

- The data types sql_variant, cursor, and table are not supported.

- The DELETE operation for the incremental migration of tables without primary key is not supported.

- The data types MONEY and SMALLMONEY only support the last two decimal places.

2.4 Privilege of Database Account

Note: If you have an account with the sysadmin server role, it is not restricted by the following privileges, such as sa account.

2.4. 1 SQL Server as Source

| Database | Structural Migration and Full Migration | Incremental Migration |

|---|---|---|

| Self-built SQL Server | db_datareader permission at least | db_owner permission at least |

2.4 SQL Server as Destination

| Database | Structural Migration, Full Migration, and Incremental Migration |

|---|---|

| Baidu AI Cloud RDS for SQL Server | At least db_owner (read-write) permission |

3. Preparations

3.1 Data Source Preparation

This article takes the migration from the self-built database in the public network to Baidu AI Cloud RDS as an example. The migration object is mssql_test.dbo.table1/mssql_test.dbo.table2/mssql_test.dbo.table3 and the migration type is structural/full/incremental. The data source preparation is as follows:

- Self-built SQL Server Instances

- Baidu AI Cloud RDS for SQL Server 2012 Instances

For other requirements for the source and destination instances, refer to the document: Take SQL Server as the source and SQL Server as the target

3.2 Preparation for taking the self-built SQL Server on the public network as the source

3.2 Authorization of Database Migration Account

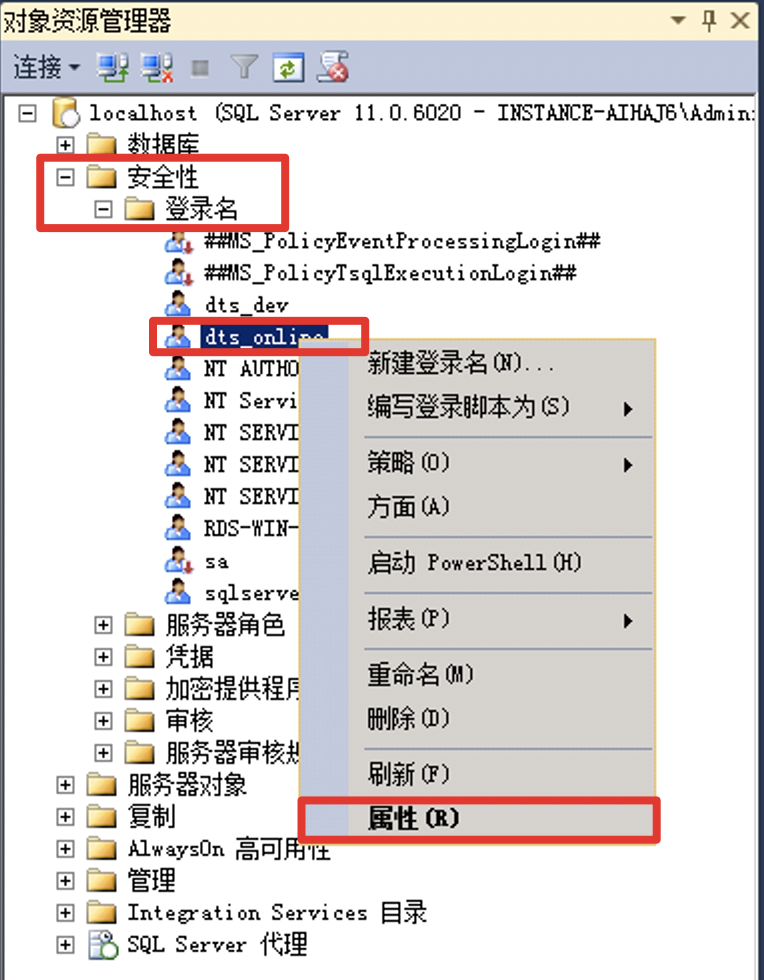

- In the "Object resource manager" on the left of SSMS, open the "Login name" in the "Security" in the list, right-click the account to be migrated, and then click "Properties", such as "dts_online", as shown in the figure:

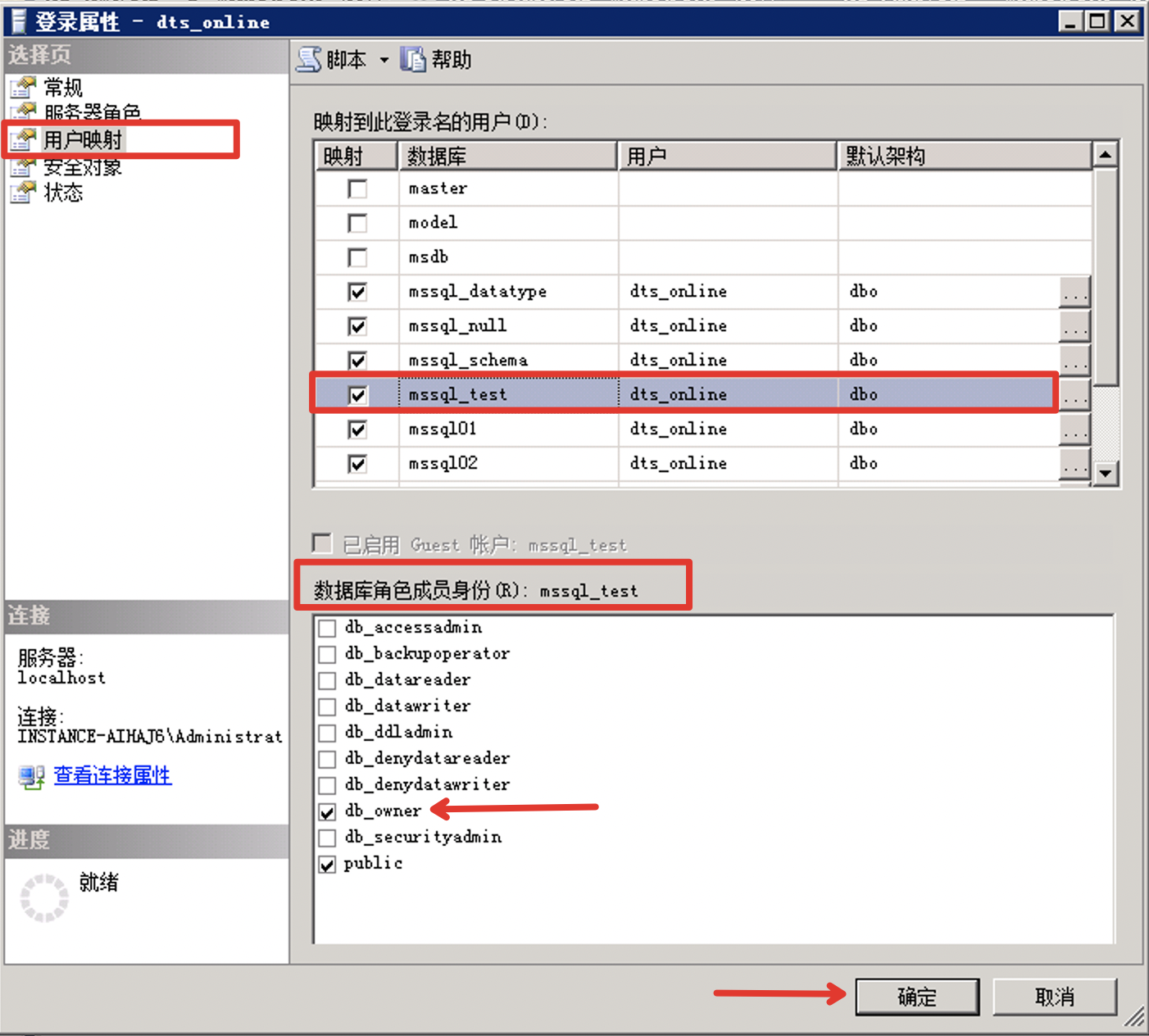

- In the pop-up login properties box, select "User mapping" on the left, and click the database to view the account permissions of the database. Then, you can select the account permissions of the database, such as read and write permission "db_owner". After the setting is complete, click [OK].

3.2.2 Preparation for enabling the CDC during the incremental migration from the source

Note: If you do not select the incremental migration, you can ignore the preparations in Section 3.2.2

1. Start the database-level CDC feature

-- Start the database-level CDC command.

EXEC sys.sp_cdc_enable_db;

- Check if the startup is successful

SELECT name,is_cdc_enabled FROM sys.databases WHERE name = 'DB_name'If you encounter the following error:

Could not update the metadata that indicates database DB_name is enabled for Change Data Capture. The failure occurred when executing the command 'SetCDCTracked(Value = 1)'. The error returned was 15404: 'Could not obtain information about Windows NT group/user 'RDS-WIN-TEST\Administrator', error code 0x534.'. Use the action and error to determine the cause of the failure and resubmit the request.Execute the following command and then re-execute the command of starting the database-level CDC again:

ALTER AUTHORIZATION ON DATABASE::[DB_name] TO [sa]

Or

EXEC sp_changedbowner 'sa'2. Start the table-level CDC feature for each table that needs to be subject to the incremental migration

-- Start the table-level CDC command.

EXEC sys.sp_cdc_enable_table

@source_schema= N'schema_name',- The name of the architecture to which the source table belongs, which has no default value and cannot be NULL.

@source_name = N'table_name', - The name of the source table, which has no default value and cannot be NULL.

@role_name = NULL,-- It is recommended to set it to NULL, which is the name of the database role used to access the changed data. It must be specified. If it is explicitly set to NULL, no control role is available for restricting the access to the changed data. It can be an existing fixed server role or database role. If the specified role does not exist, the system will create a database role with this name automatically.

@capture_instance = DEFAULT,-- The name of the capture instance used to name the capture object of the instance-specific change data. Also, it cannot be NULL, the source table can have up to two capture instances.

@supports_net_changes = 0,-- Whether to enable the support for querying the net change for this capture instance. The default value is 1. If supports_net_changes is set to 1, you must specify index_name. Otherwise, the source table must have a defined primary key.

@index_name = NULL,-- The name of the unique index that uniquely identifies the row in the source table, which can be NULL. If not used, CDC uses the primary key. If the table does not have a primary key, the primary key added later is ignored.

@captured_column_list = NULL,-- Identify the source table columns to be included in the change table. NULL means all columns are included in the change table. For the list with a comma-separated column name, you can select to put a single column name of the list into the double quotation marks ("") or square brackets ([]), which cannot contain the following reserved names: __$start_lsn, __$end_lsn, __$seqval, __$operation, and __$update_mask.

@filegroup_name = DEFAULT, - The file group to be used for the change table created for the capture instance. If this parameter is NULL, the default file group is used. It is recommended to create a separate file group for the change table for the change data capture.

@allow_partition_switch = 1 – Whether to execute the SWITCH PARTITION command of the ALTER TABLE on tables with the change data capture enabled. The default value is 1. For non-partitioned tables, this switch is always set to 1, and the actual setting is ignored.If you encounter the following error:

The message 22832, level 16, status 1, process sp_cdc_enable_table_internal, and line 623.

Cannot update the metadata to indicate that the change data capture is enabled for the table [aqadmin].[AQ_TEST_1]. The change data capture fails during the execution of the command'[sys].[sp_cdc_add_job] @job_type = N'capture''. The returned error is 22836: 'Cannot update the metadata of the database aq11 to indicate that a change data capture job has been added. The change data capture fails during the execution of the command 'sp_add_jobstep_internal'. The returned error is 14234: 'The specified @server is invalid. The valid value is returned by sp_helpserver. '. Use this action and error to determine the cause of the failure and resubmit the request.'. Use this action and error to determine the cause of the failure and resubmit the request.After the installation of the SQL Server, the host name is subject to modification, resulting in the inconsistency in the "servname" in the two statement results.

Execute the following command and then re-execute and start the table-level CDC command:

IF serverproperty('servername')<>@@servername

BEGIN

DECLARE @server SYSNAME

SET @server=@@servername

EXEC sp_dropserver @server=@server

SET @server=cast(serverproperty('servername') AS SYSNAME)

EXEC sp_addserver @server=@server,@local='LOCAL'

PRINT 'ok!'

END

ELSE

PRINT 'undo!When you start the table CDC feature for the first time, it prompts you to start two jobs, i.e., capture job and cleanup job.

3. Modify the execution cycle of the cleanup job (optional)

By default, the captured incremental data will be cleaned up after 4320 minutes. You can modify the retention time of the incremental data. For example, the following command changes the retention time to 129600 minutes.

EXECUTE sys.sp_cdc_change_job

@job_type = N'cleanup',

@retention = 129600;

-- Restart

EXECUTE sys.sp_cdc_start_job

@job_type = N'cleanup';4. Modify the cycle of the capture job to read the transaction log (optional)

The default interval is 5 seconds. The longer the interval, the longer it takes to record the incremental data in the change table. Thus, you can adjust the interval time appropriately. For example, the following command changes the interval time to 1 second.

EXECUTE sys.sp_cdc_change_job

@job_type = N'capture',

@pollinginterval = 1;

-- Restart

EXECUTE sys.sp_cdc_start_job

@job_type = N'capture';Preparation for Baidu AI Cloud RDS for SQL Server as the data destination

3.3 Authorization of Database Migration Account

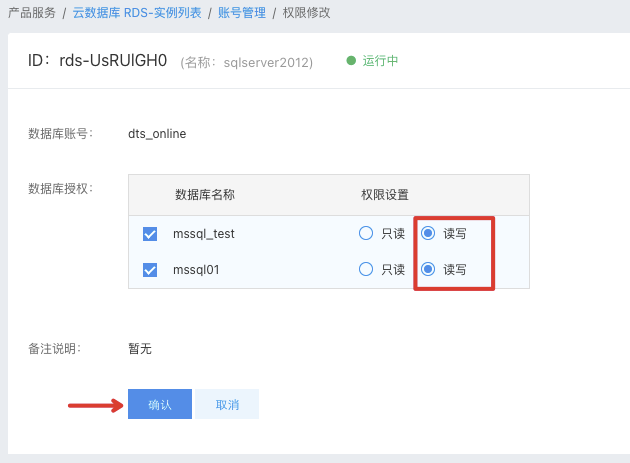

- After entering the details page of the destination instance to be migrated, click "Account Management" and click "Create Account". Create an account with "Read and write" permissions for the corresponding database. For example, create an account "dts_online" and grant read and write permissions (db_owner) to the destination database "mssql_test", and finally click [OK]. If there is already a migration account, ignore this step.

- If a migration account already exists, click [Modify permissions] in the account list, add read and write permissions for the destination database to be migrated, and finally click [OK].

3.3.2 Enable the access to the Baidu AI Cloud database via the public network

- Log in to Baidu AI Cloud to access the console and click the instance name to enter the details page of the destination instance to be migrated;

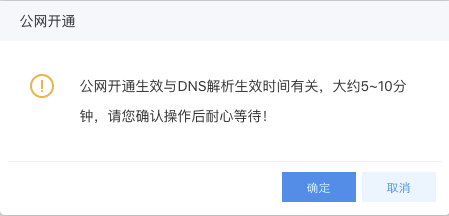

- After entering the instance details page, click [Enable] in the public network access option, and then click [OK] after a confirmation box for enabling the public network pops up.

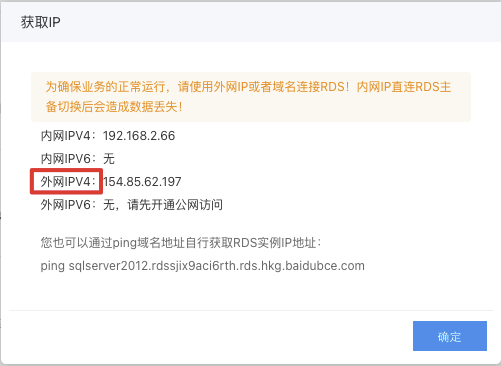

- After the successful enabling, you can see that the public network access status changes to "Enabled" on the details page. Click "Get IP" to view the external network IPV4, which can be used to configure DTS tasks later.

3.3.3 Create a Baidu AI Cloud database to be migrated

- After entering the details page of the destination instance to be migrated, click [Database management] and click [Create Database].

- For example, when you migrate the instance to the destination database "mssql_test" from the cloud, you need to create the corresponding database name manually; that is, a database already exists in Baidu AI Cloud RDS. Click OK.

4. Create Baidu AI Cloud DTS Migration Task

4.1 Configuration of source library and destination library

- Log in to Baidu AI Cloud to access the console, create a data migration instance, select "Self-built data storage" on the source and destination, click [Next] and pay for the purchase;

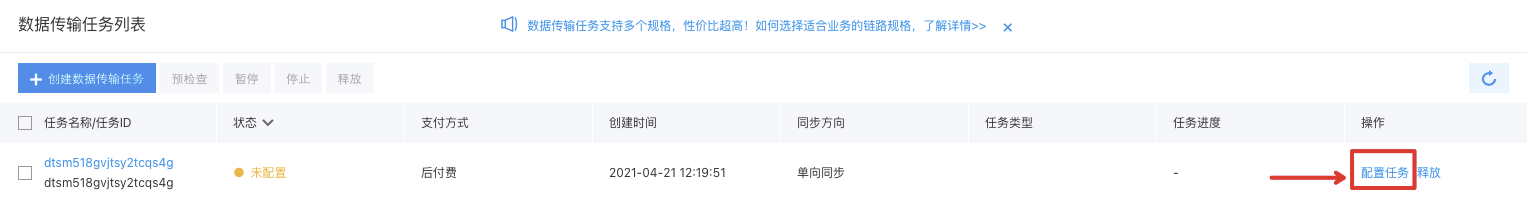

- Click the “Configure Task” button to start the configuration of migration task for the instance;

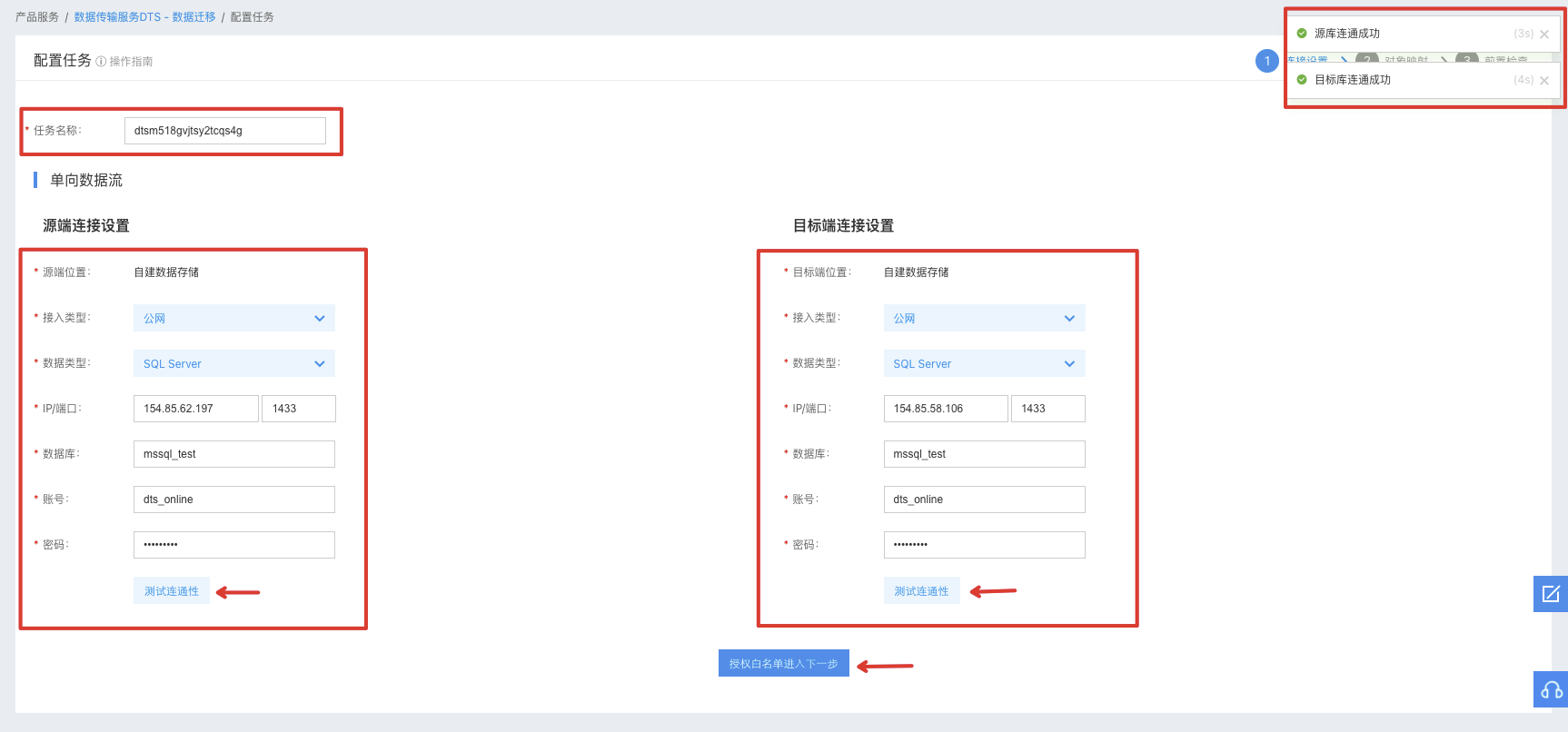

- Enter the task name as required. In the source connection settings, select [Public network] as the access type and "SQl Server" as the data type. Then, enter the connection information of the source self-built SQL Server instance. In the destination connection settings, select [Public network] as the access type and "SQl Server" as the data type, and then enter the connection information of the destination Baidu AI Cloud RDS for SQL Server instance. After entering the connection information, click "Test connectivity" so that a successful connection message pops up in the upper right corner.

- Click the “Authorize Whitelist and Go to Next” button to continue to configure migration type and library table.

4.2 Migration type and library table

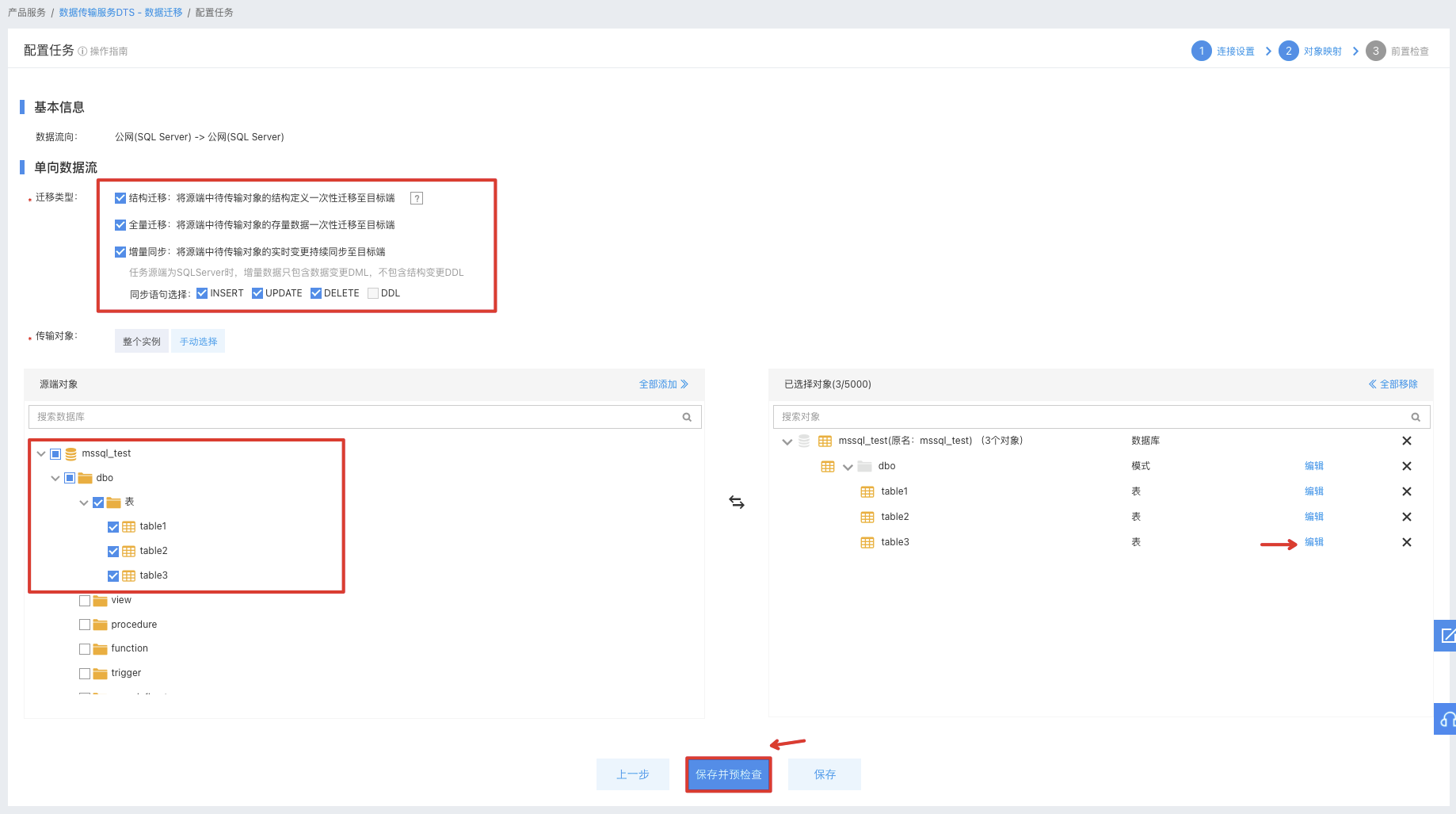

- According to the migration requirements, check the migration type: Structural migration, full migration, and incremental migration; Note: Before the incremental migration, you need to enable the CDC feature on the database table. For details, refer to Section 3.2.2 of this document.

- You can select the object to be migrated in the source library object and view the selected object on the right. To change the name of the migrated object in the target library, you can click the Edit button on the right of the object to rename it. Click “Save and Precheck” to start the precheck.

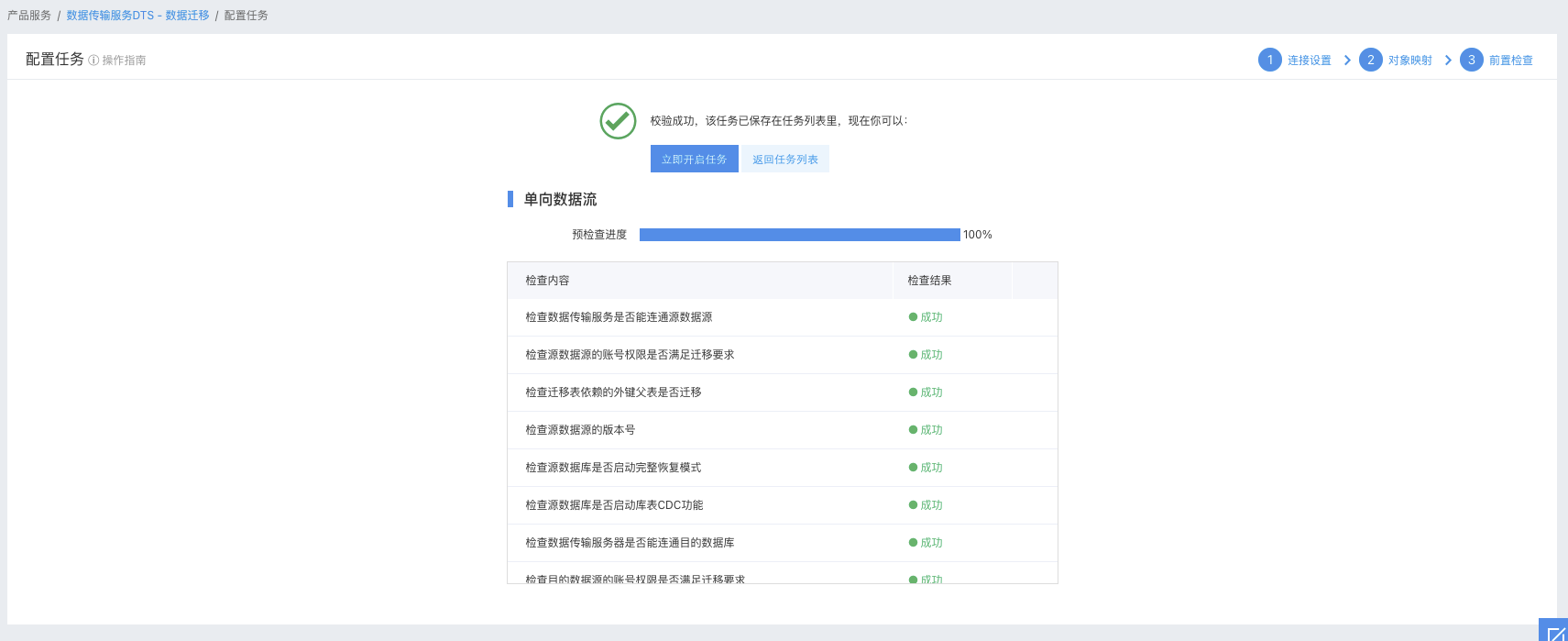

4.3 Task verification and startup

- After a while, the page displays that the task verification is successful.

- Click the “Start task now” button to start the task.