Deploying an NGC Environment on a GPU Instance

1. Background Introduction

NVIDIA GPU Cloud (NGC) is a repository of GPU-optimized software provided by NVIDIA and third-party ISVs, used mainly in AI, HPC, and virtualization. It offers containers, pre-trained models, Helm charts for Kubernetes deployment, and industry-specific AI toolkits with SDKs. NGC simplifies building, customizing, and integrating GPU-optimized software, which shortens the overall development cycle.

Prerequisites:

Register an NGC account at https://ngc.nvidia.com/signin.

Procedure:

- Create a GPU instance. For the procedure, see Creating a GPU instance.

Note: When selecting the instance image, choose a system image that is NGC-Ready. The system images currently supported by NGC are Ubuntu 16.04, 18.04, and 20.04, and RHEL 7.5 and 7.6.

Baidu AI Cloud provides NGC-Ready system images. For the list of public system images supported by Baidu AI Cloud, see the linked page.
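Once the instance is running, the image can be checked against the releases listed in the note above. The following is a minimal sketch, not an official NGC tool: the function name `is_ngc_listed_os` is hypothetical, and it assumes the image ships a standard `os-release` file that reports `ID` and `VERSION_ID` in the usual form (for example `ubuntu` and `18.04`).

```shell
# Return 0 when the given os-release file describes one of the releases
# listed in the note above. The function name is hypothetical.
is_ngc_listed_os() {
    os_release_file="${1:-/etc/os-release}"
    # Source the file in a subshell so ID and VERSION_ID do not leak out.
    os_tag=$(. "$os_release_file" && printf '%s %s' "${ID:-}" "${VERSION_ID:-}")
    case "$os_tag" in
        "ubuntu 16.04"|"ubuntu 18.04"|"ubuntu 20.04") return 0 ;;
        "rhel 7.5"|"rhel 7.6") return 0 ;;
        *) return 1 ;;
    esac
}

if is_ngc_listed_os; then
    echo "This image is in the NGC-supported list."
else
    echo "This image is not in the NGC-supported list."
fi
```

Passing a file path as the first argument makes it possible to check an `os-release` file copied from another machine.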

- Install the GPU driver on the GPU instance. We recommend the latest driver version for your operating system. For installation instructions, see Usage Steps of Public Image.
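Before moving on, it may help to confirm that the driver is actually installed. The sketch below assumes the driver package provides the `nvidia-smi` utility on the `PATH`; the function name `gpu_driver_status` is hypothetical.

```shell
# Print the GPU name and driver version, or report that the driver is missing.
# The function name is hypothetical.
gpu_driver_status() {
    if command -v nvidia-smi >/dev/null 2>&1; then
        # One CSV line per GPU: name, driver version.
        nvidia-smi --query-gpu=name,driver_version --format=csv,noheader
    else
        echo "nvidia-smi not found: the GPU driver does not appear to be installed" >&2
        return 1
    fi
}

gpu_driver_status || echo "Install the GPU driver before continuing."
```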

- Install Docker and the Docker Utility Engine for NVIDIA GPUs (nvidia-docker).

For Docker installation instructions, see the guides for Ubuntu and CentOS.

The steps below use Ubuntu as an example; see the linked Ubuntu guide for details.

The installation sequence is: 1) install the prerequisites for Docker; 2) add Docker's official GPG key; 3) add the official stable Docker repository.

$ sudo apt-get install -y ca-certificates curl software-properties-common

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

Install nvidia-docker:

$ curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

$ distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

$ curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

$ sudo apt-get update

$ sudo apt-get install -y nvidia-docker2

$ sudo usermod -aG docker $USER

$ sudo systemctl daemon-reload

$ sudo systemctl reload docker

- Generate the NGC API key

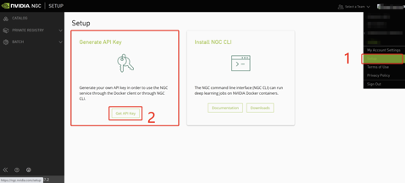

After registering an NGC account, generate an API key for it. Log in to NGC, click your account name, and select "Setup" to open the Setup page, then click "Get API Key" to open the Generate API Key page.

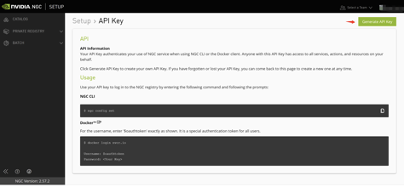

On that page, click "Generate API Key".

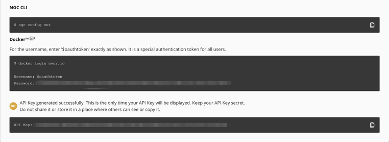

The system asks you to confirm that you want to generate an API key. Click "Confirm", and the page changes to look similar to the figure below:

The generated key appears in the Password field. Return to the shell of the GPU instance and log in to the registry, following the procedure shown in the figure above:

$ docker login nvcr.io

Username: $oauthtoken

Password: [Enter the API key you generated]
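For scripted setups, the interactive login can be replaced with a non-interactive one. The sketch below is one possible approach, not part of the original procedure: it assumes the API key has been exported in an environment variable named `NGC_API_KEY` (a name chosen here for illustration), and the helper `ngc_login` is hypothetical. Note that the username is the literal string `$oauthtoken`, so it must be single-quoted to keep the shell from treating it as a variable.

```shell
# The NGC username is the literal string $oauthtoken; single quotes keep the
# shell from expanding it as a variable.
NGC_USERNAME='$oauthtoken'

# Hypothetical helper: log in to nvcr.io using the API key stored in the
# NGC_API_KEY environment variable (the variable name is an assumption).
ngc_login() {
    if [ -z "${NGC_API_KEY:-}" ]; then
        echo "NGC_API_KEY is not set" >&2
        return 1
    fi
    # --password-stdin reads the key from standard input instead of a
    # command-line argument, keeping it out of the process list.
    printf '%s' "$NGC_API_KEY" | docker login nvcr.io --username "$NGC_USERNAME" --password-stdin
}
```

After exporting the key, calling `ngc_login` performs the same login as the prompts above.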

- Use an image from NGC

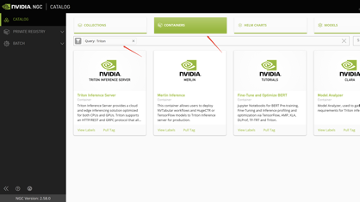

Here, we take Triton as an example:

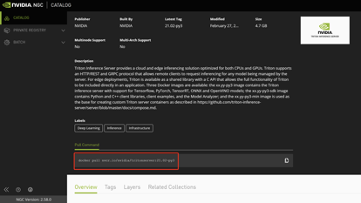

• Open the CATALOG section of NGC, select CONTAINERS, and enter the framework name Triton in the search box.

• Click the Triton Inference Server card in the results. The page shows an introduction to the framework and the command for pulling the image.

• Run the command shown in the red box in the figure above on the command line of the GPU instance to pull the latest version of the container image.

$ docker pull nvcr.io/nvidia/tritonserver:21.02-py3

In this way, the framework or software product can be used as a Docker container.
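As a rough sketch of what using the pulled image might look like, the helper below starts Triton with GPU access. Several things here are assumptions rather than part of the original procedure: the function names `ngc_image_ref` and `run_triton` are hypothetical, the host model repository path is supplied by the caller, and the `--gpus all` flag assumes Docker 19.03 or later with the NVIDIA container runtime installed as described earlier.

```shell
# Build an nvcr.io image reference from organization, repository, and tag.
# The function name is hypothetical.
ngc_image_ref() {
    printf 'nvcr.io/%s/%s:%s\n' "$1" "$2" "$3"
}

TRITON_IMAGE=$(ngc_image_ref nvidia tritonserver 21.02-py3)

# Hypothetical helper: start Triton with GPU access and a model repository
# mounted from the host. The host path is passed as the first argument.
run_triton() {
    model_repo="$1"
    # Ports: 8000 for HTTP, 8001 for gRPC, 8002 for metrics.
    docker run --rm --gpus all \
        -p 8000:8000 -p 8001:8001 -p 8002:8002 \
        -v "$model_repo":/models \
        "$TRITON_IMAGE" tritonserver --model-repository=/models
}
```

Calling `run_triton` with the path to a local model repository starts the server in the foreground. The function is only defined here, not invoked, so sourcing the snippet has no side effects.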